rlPGAgent

Policy gradient (PG) reinforcement learning agent

Description

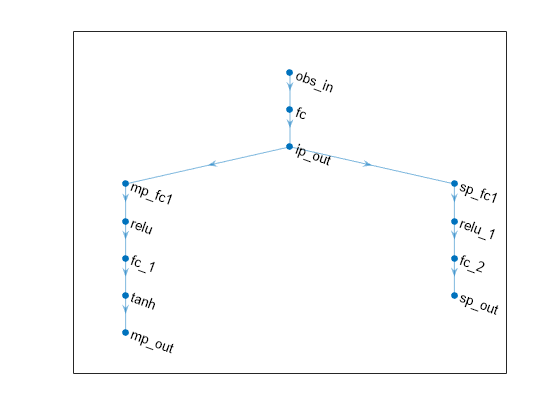

The policy gradient (PG) algorithm is an on-policy reinforcement learning method for environments with a discrete or continuous action space. A PG agent uses the REINFORCE algorithm to directly estimate a stochastic policy. Because REINFORCE is a Monte Carlo method, the agent does not learn during an episode; it updates its policy only after the episode is finished. For continuous action spaces, this agent does not enforce the constraints set in the action specification; therefore, if you need to enforce action constraints, you must do so within the environment.
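To make the Monte Carlo nature of REINFORCE concrete, here is a minimal pure-Python sketch (not the toolbox implementation) of REINFORCE for a tabular softmax policy over discrete actions. The function names, the toy one-state task, and the discount factor and learning rate are illustrative assumptions. The returns G_t depend on every later reward in the episode, so they can only be computed, and the policy only updated, once the episode has ended. The sketch omits the gamma^t factor that appears in some textbook statements of REINFORCE, a common simplification.

```python
import math
import random


def softmax(prefs):
    """Numerically stable softmax over a list of action preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(probs, rng):
    """Draw an action index from a categorical (stochastic) policy."""
    u = rng.random()
    cumulative = 0.0
    for a, p in enumerate(probs):
        cumulative += p
        if u < cumulative:
            return a
    return len(probs) - 1


def discounted_returns(rewards, gamma):
    """Monte Carlo returns G_t = r_t + gamma * G_{t+1}, computed backward
    over a finished episode."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns


def reinforce_update(theta, episode, gamma=0.99, lr=0.1):
    """One REINFORCE step for a tabular softmax policy (hypothetical helper).

    theta[s][b] are action preferences; episode is a list of
    (state, action, reward) tuples from one complete episode.
    """
    returns = discounted_returns([r for (_, _, r) in episode], gamma)
    grads = [[0.0] * len(row) for row in theta]
    for (s, a, _), g in zip(episode, returns):
        probs = softmax(theta[s])
        # d/d theta[s][b] of log pi(a|s) is 1{a == b} - pi(b|s).
        for b in range(len(theta[s])):
            grads[s][b] += g * ((1.0 if b == a else 0.0) - probs[b])
    # Apply the accumulated gradient once, after the whole episode.
    for s in range(len(theta)):
        for b in range(len(theta[s])):
            theta[s][b] += lr * grads[s][b]
    return theta


if __name__ == "__main__":
    # Hypothetical toy task: one state; action 1 pays 1, action 0 pays 0.
    rng = random.Random(0)
    theta = [[0.0, 0.0]]
    for _ in range(200):
        episode = []
        for _ in range(5):  # first collect a complete episode...
            a = sample_action(softmax(theta[0]), rng)
            episode.append((0, a, 1.0 if a == 1 else 0.0))
        reinforce_update(theta, episode)  # ...then learn from it
    print(softmax(theta[0]))
```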

For more information on PG agents and the REINFORCE algorithm, see REINFORCE Policy Gradient (PG) Agent. For more information on the different types of reinforcement learning agents, see Reinforcement Learning Agents.

Creation

Syntax

Description

Create Agent from Observation and Action Specifications

agent = rlPGAgent(observationInfo,actionInfo) creates a policy gradient agent for an environment with the observation specification observationInfo and the action specification actionInfo. The ObservationInfo and ActionInfo properties of agent are set to the observationInfo and actionInfo input arguments, respectively.

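agent = rlPGAgent(observationInfo,actionInfo,initOpts) creates a policy gradient agent whose default networks are configured using the options specified in the initOpts object. For more information on the initialization options, see rlAgentInitializationOptions.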

Create Agent from Actor and Critic

agent = rlPGAgent(actor) creates a PG agent with the specified actor. The UseBaseline property of the agent is false in this case.
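The UseBaseline setting determines whether a learned baseline is subtracted from the return before the return scales the policy gradient. The plain-Python sketch below contrasts the two cases; the helper names and the tabular value estimate standing in for a critic are hypothetical, not toolbox objects. Without a baseline, each step is weighted by the raw return G_t; with a baseline, it is weighted by G_t - V(s_t). Subtracting a state-dependent baseline reduces the variance of the gradient estimate without changing its expected value.

```python
def policy_weights(returns, states, value_fn=None):
    """Per-step weights that multiply grad log pi(a_t|s_t) in REINFORCE.

    value_fn is None  -> no baseline: weight is the raw return G_t.
    value_fn given    -> baseline: weight is G_t - V(s_t).
    """
    if value_fn is None:
        return list(returns)
    return [g - value_fn(s) for g, s in zip(returns, states)]


def update_baseline(values, states, returns, lr=0.1):
    """Move a tabular value estimate V(s) toward each observed return
    (an incremental squared-error step)."""
    for s, g in zip(states, returns):
        values[s] += lr * (g - values[s])
    return values


if __name__ == "__main__":
    returns = [1.75, 1.5, 1.0]
    states = [0, 0, 0]
    values = {0: 1.0}  # hypothetical current baseline estimate
    print(policy_weights(returns, states))                        # [1.75, 1.5, 1.0]
    print(policy_weights(returns, states, lambda s: values[s]))   # [0.75, 0.5, 0.0]
```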

Specify Agent Options

agent = rlPGAgent(___,agentOptions) creates a PG agent and sets the AgentOptions property to the agentOptions input argument. Use this syntax after any of the input arguments in the previous syntaxes.

Input Arguments

Properties

Object Functions

train | Train reinforcement learning agents within a specified environment
sim | Simulate trained reinforcement learning agents within a specified environment
getAction | Obtain action from agent, actor, or policy object given environment observations
getActor | Extract actor from reinforcement learning agent
setActor | Set actor of reinforcement learning agent
getCritic | Extract critic from reinforcement learning agent
setCritic | Set critic of reinforcement learning agent
generatePolicyFunction | Generate MATLAB function that evaluates policy of an agent or policy object

Examples

Tips

For continuous action spaces, the rlPGAgent agent does not enforce the constraints set by the action specification, so you must enforce action space constraints within the environment.
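One way to enforce the limits inside the environment is to saturate each incoming action in the environment's step function before applying it. The class below is a hypothetical pure-Python environment, not a toolbox object; its name, bounds, and reward are assumptions chosen for illustration. An unbounded Gaussian sample, as a continuous stochastic actor might produce, is clipped to the allowed range.

```python
import random


class ClippedActionEnv:
    """Hypothetical 1-D environment that enforces its own action bounds."""

    def __init__(self, lower=-1.0, upper=1.0):
        self.lower = lower
        self.upper = upper
        self.position = 0.0

    def step(self, action):
        # Saturate the proposed action to [lower, upper] before using it.
        applied = min(max(action, self.lower), self.upper)
        self.position += applied
        reward = -abs(self.position)  # hypothetical reward: stay near zero
        return self.position, reward, applied


if __name__ == "__main__":
    env = ClippedActionEnv(lower=-1.0, upper=1.0)
    rng = random.Random(1)
    for _ in range(3):
        proposed = rng.gauss(0.0, 2.0)  # unbounded Gaussian sample
        _, _, applied = env.step(proposed)
        print(f"proposed {proposed:+.3f} -> applied {applied:+.3f}")
```

Clipping is only one choice; an environment could instead penalize or reject out-of-range actions, depending on what the physical system allows.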

Version History

Introduced in R2019a

See Also

Apps

Functions

getAction | getActor | getCritic | getModel | generatePolicyFunction | generatePolicyBlock | getActionInfo | getObservationInfo

Objects

rlPGAgentOptions | rlAgentInitializationOptions | rlQValueFunction | rlDiscreteCategoricalActor | rlContinuousGaussianActor | rlACAgent | rlDDPGAgent