NARMAX Model Identification

This topic describes some ways to identify nonlinear autoregressive moving average with exogenous inputs (NARMAX) models. These models are currently not available in the System Identification Toolbox™ software. However, this topic shows how to identify NARMAX models using the available model structures and their training algorithms by preparing a finite-horizon prediction algorithm.

Linear Autoregressive Models

NARMAX models are nonlinear counterparts of the linear autoregressive models that are popular structures used to forecast the outcomes of time series. To understand the structure of a NARMAX model, it is helpful to review the simpler, linear versions first.

AR Models

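The simplest form of a linear autoregressive (AR) model is

$$y(k) + a_1 y(k-1) + a_2 y(k-2) + \dots = e(k)$$

or

$$A(q)\,y(k) = e(k)$$

where $A(q) = 1 + a_1 q^{-1} + a_2 q^{-2} + \dots$ is a polynomial in the shift operator $q^{-1}$, that is, $q^{-i} y(k) = y(k-i)$.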

The expression y(k) describes the time series under observation and e(k) is the disturbance process. Thus, y(k) is generated by a weighted sum of its own past values but is corrupted by the noise, e(k). This is called the data-generating system, also known as the true system. In engineering applications, y(k) is typically obtained by sampling a signal of physical origin, y(t), such as vibrations measured using an accelerometer. When y(t) is sampled uniformly using a sampling interval (sample time) of Ts, it leads to the measurements y(Ts),y(2Ts),...,y(NTs), where k = 1,...,N are the sampling instants. Typically, e(k) is assumed to be a zero-mean, independent and identically distributed Gaussian process. Then, given the measurements y(k), k = 1,2,...,T, the prediction of the response at the next time instant, T + 1, is given by

$$\tilde{y}(T+1) = -a_1 y(T) - a_2 y(T-1) - \dots$$

This equation is called the one-step-ahead predictor for the process y(k). The equation predicts the value of y exactly one step into the future.
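The following Python sketch illustrates the one-step-ahead AR predictor. It assumes the sign convention y(k) + a1 y(k-1) + a2 y(k-2) + ... = e(k); the function names and the AR(2) coefficients are illustrative choices, not part of any toolbox.

```python
import random

def simulate_ar(a, n, noise_std=0.1, seed=0):
    """Simulate y(k) + a[0]*y(k-1) + a[1]*y(k-2) + ... = e(k) for n samples."""
    rng = random.Random(seed)
    y = []
    for k in range(n):
        # Weighted sum of the past outputs that already exist.
        past = sum(a[i] * y[k - 1 - i] for i in range(len(a)) if k - 1 - i >= 0)
        y.append(-past + rng.gauss(0.0, noise_std))
    return y

def predict_next_ar(a, y):
    """One-step-ahead prediction of y(T+1) from the measurements y(1..T)."""
    # The noise e(T+1) has zero mean, so it contributes nothing to the prediction.
    return -sum(a[i] * y[-1 - i] for i in range(min(len(a), len(y))))

a = [-1.5, 0.7]              # illustrative stable AR(2) coefficients (assumption)
y = simulate_ar(a, 50)       # measurements y(1), ..., y(50)
y_next = predict_next_ar(a, y)  # prediction of y(51)
```

The predictor needs only the last few measurements, so no recursion over past predictions is involved.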

ARX Models

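When exogenous but measurable influences, u(k), affect y(k), the data-generating system equation is

$$y(k) + a_1 y(k-1) + a_2 y(k-2) + \dots = b_0 u(k) + b_1 u(k-1) + b_2 u(k-2) + \dots + e(k)$$

or more succinctly

$$A(q)\,y(k) = B(q)\,u(k) + e(k)$$

where $A(q) = 1 + a_1 q^{-1} + a_2 q^{-2} + \dots$ and $B(q) = b_0 + b_1 q^{-1} + b_2 q^{-2} + \dots$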

This model is called an autoregressive with exogenous inputs (ARX) model. Some observations regarding the ARX model structure are:

u(k) is assumed to be known or measured without noise. In practice, any measurement errors in u(k) are lumped into the fictitious noise source e(k).

The model regressors are the lagged variables y(k-1),y(k-2),...,u(k),u(k-1),u(k-2),.... At time instant k, the regressors are fully known.

In engineering applications, y(t) is referred to as an output signal and u(t) as an input signal. Thus, the data-generating system equation is an input-output model.

The one-step-ahead predictor takes the form

$$\tilde{y}(k) = -a_1 y(k-1) - a_2 y(k-2) - \dots + b_0 u(k) + b_1 u(k-1) + b_2 u(k-2) + \dots$$

You can also express an ARX model in the state-space form by defining the states using the lagged variables.
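As a rough illustration of why the ARX predictor is a static map, the Python sketch below computes the prediction as an inner product of a parameter vector and a regressor vector. It assumes the convention A(q)y(k) = B(q)u(k) + e(k) with B(q) starting at b0; the helper names and numbers are hypothetical.

```python
def arx_regressors(y, u, k, na, nb):
    """Return [y(k-1), ..., y(k-na), u(k), u(k-1), ..., u(k-nb)] at 0-based index k."""
    if k < max(na, nb):
        raise ValueError("not enough past samples for the requested lags")
    return [y[k - i] for i in range(1, na + 1)] + [u[k - j] for j in range(nb + 1)]

def arx_predict(a, b, y, u, k):
    """One-step-ahead ARX prediction of y(k) from measured data only."""
    phi = arx_regressors(y, u, k, len(a), len(b) - 1)
    theta = [-ai for ai in a] + list(b)   # weights applied to the regressors
    return sum(t * p for t, p in zip(theta, phi))

# Illustrative coefficients and data (assumptions, not from the source).
a = [-0.5]            # A(q) = 1 - 0.5 q^-1
b = [1.0, 0.2]        # B(q) = 1 + 0.2 q^-1
y = [1.0, 2.0, 0.0]   # y[2] is the value being predicted, so it is not used
u = [0.0, 1.0, 3.0]
y_hat = arx_predict(a, b, y, u, k=2)
```

Every entry of the regressor vector is a measured quantity, so the prediction at one time instant never depends on an earlier prediction.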

ARMA Models

The predictor of the AR or ARX model is a static equation, that is, the terms on the right-hand side of the predictor equations are fully known at the time instant k and do not depend on past values of the prediction itself. However, when the output in the data-generating system is affected by not just the current value of the disturbance e(k) but also its past values (e(k-1),e(k-2), and so on), the equation gets more complicated. For example, the form of an autoregressive moving average (ARMA) model is

$$y(k) + a_1 y(k-1) + a_2 y(k-2) + \dots = e(k) + c_1 e(k-1) + c_2 e(k-2) + \dots$$

Here, the one-step-ahead prediction of y(k) is not as straightforward as in the ARX model. To estimate ỹ(k + 1), you need to know the past predictions (ỹ(k),ỹ(k-1),...).

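An ARMA predictor takes the form

$$\tilde{y}(k+1) = -a_1 y(k) - a_2 y(k-1) - \dots + c_1 e(k) + c_2 e(k-1) + \dots, \qquad e(k) = y(k) - \tilde{y}(k)$$

which can be rearranged to

$$\tilde{y}(k+1) + c_1 \tilde{y}(k) + c_2 \tilde{y}(k-1) + \dots = (c_1 - a_1)\, y(k) + (c_2 - a_2)\, y(k-1) + \dots$$

This equation is autoregressive in ỹ: each prediction depends on earlier predictions, whose values are not known in advance. Only the initial value or initial guess for ỹ(1) is available. You must simulate this model by using the initial guess ỹ(1) to solve for ỹ(2), and then use the values ỹ(1),ỹ(2) to solve for ỹ(3), and so on.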
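The forward march from the initial guess can be sketched in Python as follows. The sketch assumes the ARMA convention y(k) + a1 y(k-1) + ... = e(k) + c1 e(k-1) + ... and estimates each past error as the difference between the measurement and its prediction; the function name and default initial guess are illustrative.

```python
def arma_predict(a, c, y, y_pred_init=0.0):
    """Return one-step-ahead predictions for every sample of y.

    y_pred[0] is the initial guess; each later entry is computed from past
    measurements and past predictions, so the loop must run in time order.
    """
    y_pred = [y_pred_init]
    for k in range(1, len(y)):
        # Autoregressive part: weighted sum of past measurements.
        ar = -sum(a[i] * y[k - 1 - i] for i in range(len(a)) if k - 1 - i >= 0)
        # Moving average part: past errors e(j) = y(j) - y_pred(j).
        ma = sum(c[i] * (y[k - 1 - i] - y_pred[k - 1 - i])
                 for i in range(len(c)) if k - 1 - i >= 0)
        y_pred.append(ar + ma)
    return y_pred

# Illustrative first-order example (coefficients are assumptions).
preds = arma_predict(a=[-0.5], c=[0.3], y=[1.0, 2.0, 3.0])
```

Setting `c` to an empty list removes the dependence on past predictions, and the loop reduces to the static AR predictor.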

ARMAX Models

When exogenous inputs are present, the ARMA model becomes an ARMAX model. The form of an ARMAX model is

$$A(q)\,y(k) = B(q)\,u(k) + C(q)\,e(k)$$

where $C(q) = 1 + c_1 q^{-1} + c_2 q^{-2} + \dots$

Nonlinear Autoregressive Models

From the predictor for the ARX model, note that:

The regressors are all lagged versions of the measured variables y(k) and u(k) with no other transformations.

The prediction uses a weighted sum of the regressors.

NARX Models

For a nonlinear extension of the linear ARX predictor:

In place of linear regressors (y(k - 1),y(k - 2),...,u(k - 1),...), you can use nonlinear transformations such as polynomials or trigonometric transformations of the lagged variables, for example, $y(k-1)^2$, $y(k-4)\,u(k-6)$, $\sin(3u(k-5))$, $|u(k)|^3$, and so on.

You can replace the simple weighted sum with a more complicated nonlinear map such as sigmoids or wavelets.

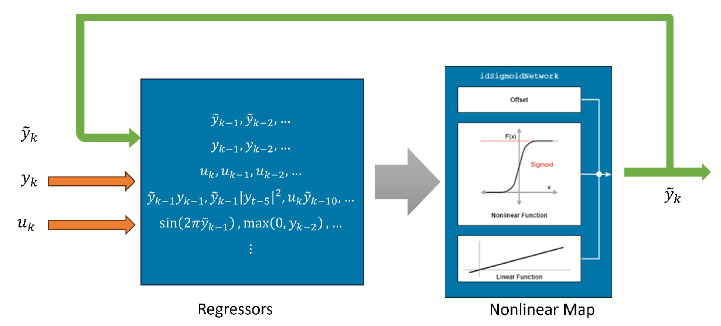

Nonlinear ARX (NARX) models facilitate such extensions. The general form of these models is

$$y(k) = f\big(r_1(k), r_2(k), \dots\big) + e(k)$$

where $r_1(k), r_2(k), \dots$ are the model regressors. For example, $r_1(k) = y(k-1)$, $r_2(k) = y(k-5)^2$, $r_3(k) = \sin(2\pi u(k))$, and so on. A simpler notation for the NARX models is

$$y(k) = f\big(y(k-1), y(k-2), \dots, u(k), u(k-1), \dots\big) + e(k)$$

where it is understood that the lagged variables can undergo additional transformations (polynomial, trigonometric, and so on) before being passed as input arguments to the mapping function, $f(\cdot)$.

You represent the NARX models with idnlarx objects and train them using the nlarx command.
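To make the two-stage structure of a NARX predictor concrete, here is a minimal Python sketch: transformed regressors are computed from lagged data and then passed to a nonlinear map. The regressors mirror the examples in the text; the map (a linear term plus one sigmoid unit) and all weights are hypothetical, and the code does not reproduce the idnlarx or nlarx interfaces.

```python
import math

def narx_regressors(y, u, k):
    """Return [y(k-1), y(k-5)^2, sin(2*pi*u(k))] at 0-based index k."""
    if k < 5:
        raise ValueError("need at least five past samples")
    return [y[k - 1], y[k - 5] ** 2, math.sin(2 * math.pi * u[k])]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def narx_map(r, w_lin, w_sig, bias, gain):
    """Illustrative map f(r) = w_lin . r + gain * sigmoid(w_sig . r + bias)."""
    linear = sum(w * ri for w, ri in zip(w_lin, r))
    activation = sum(w * ri for w, ri in zip(w_sig, r)) + bias
    return linear + gain * sigmoid(activation)

def narx_predict(y, u, k, w_lin, w_sig, bias, gain):
    """One-step-ahead NARX prediction of y(k); all inputs are measured data."""
    return narx_map(narx_regressors(y, u, k), w_lin, w_sig, bias, gain)

# Illustrative data and weights (assumptions).
y = [3.0, 0.0, 0.0, 0.0, 2.0, 0.0]
u = [0.0, 0.0, 0.0, 0.0, 0.0, 0.25]
y_hat = narx_predict(y, u, 5, w_lin=[1.0, 0.0, 0.0],
                     w_sig=[0.0, 0.0, 0.0], bias=0.0, gain=2.0)
```

As in the linear ARX case, the prediction is a static function of known quantities, so training reduces to fitting the weights of the map.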

NARMAX Models

Similar to the linear case, you can extend the NARX model to allow for dependence on the past prediction errors, leading to the NARMAX model form

$$y(k) = f\big(y(k-1), y(k-2), \dots, u(k), u(k-1), \dots, e(k-1), e(k-2), \dots\big) + e(k)$$

In this form, you can view e(k - 1),e(k - 2),... as extra regressors whose values are not known directly. At time k, e(k - i) = y(k - i) - ỹ(k - i), so the form of the one-step-ahead predictor is

$$\tilde{y}(k) = f\big(y(k-1), y(k-2), \dots, u(k), u(k-1), \dots, y(k-1) - \tilde{y}(k-1), y(k-2) - \tilde{y}(k-2), \dots\big)$$

Because the predictions depend on their own past values, to determine ỹ(k + 1), you must simulate the model: start at ỹ(1) and march forward to the current time instant k. This simulation makes the prediction more time-consuming. Also, determining $f(\cdot)$ by model training is more complex due to the recursive nature of the prediction.

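NARMAX Models with Linear and Additive Moving Average Term. Consider the data-generating system

$$y(k) = f\big(y(k-1), y(k-2), \dots, u(k-1), u(k-2), \dots\big) + C(q)\,e(k)$$

Here, the contribution of the error terms is written as a separate additive term $C(q)\,e(k) = e(k) + c_1 e(k-1) + c_2 e(k-2) + \dots$. The one-step-ahead predictor is

$$\tilde{y}(k+1) = f\big(y(k), y(k-1), \dots, u(k), u(k-1), \dots\big) + c_1 e(k) + c_2 e(k-1) + \dots, \qquad e(k) = y(k) - \tilde{y}(k)$$

You can rearrange the predicted quantities to

$$C(q)\,\tilde{y}(k+1) = f\big(y(k), y(k-1), \dots, u(k), u(k-1), \dots\big) + c_1 y(k) + c_2 y(k-1) + \dots$$

where $C(q) = 1 + c_1 q^{-1} + c_2 q^{-2} + \dots$, so that $C(q)\,\tilde{y}(k+1) = \tilde{y}(k+1) + c_1 \tilde{y}(k) + c_2 \tilde{y}(k-1) + \dots$. This equation represents an autoregressive model for the process ỹ(k + 1). This equation is driven by the inputs y(k) and u(k), which are assumed to be known at time instant k. For training this model using input and output measurements, {u(k), y(k)}, k = 1,2,...,N, you need to determine the values of the coefficients (such as weights and biases) used by the nonlinear function, $f(\cdot)$, and the coefficients $c_1, c_2, \dots$ used by the moving average terms.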
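The recursion for this additive form can be sketched in Python as shown below. The sketch assumes that the nonlinear part depends only on samples strictly before the predicted instant and that past errors are estimated as measurement minus prediction; `f_example`, its coefficients, and the function names are hypothetical stand-ins for a trained nonlinear map.

```python
import math

def f_example(y, u, k):
    """Hypothetical nonlinear part; uses only samples with index below k."""
    return 0.5 * math.tanh(y[k - 1]) + 0.8 * u[k - 1]

def narmax_additive_predict(f, c, y, u, y_pred_init=0.0):
    """Predictions for a NARMAX model with a linear, additive MA term.

    y_pred[k] = f(past data) + sum_i c[i] * (y[k-1-i] - y_pred[k-1-i]).
    """
    y_pred = [y_pred_init]
    for k in range(1, len(y)):
        nonlinear = f(y, u, k)
        # Moving average contribution from the estimated past errors.
        moving_avg = sum(c[i] * (y[k - 1 - i] - y_pred[k - 1 - i])
                         for i in range(len(c)) if k - 1 - i >= 0)
        y_pred.append(nonlinear + moving_avg)
    return y_pred

# Illustrative data and coefficient (assumptions).
preds = narmax_additive_predict(f_example, c=[0.5], y=[1.0, 0.0], u=[1.0, 0.0])
```

Training such a model means adjusting both the parameters inside `f` and the entries of `c`, with the loop above evaluated inside the cost function.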

Generic NARMAX Models. In general, you do not know the nature of the dependence of the output y(k) on the error terms e(k - 1),e(k - 2),.... Using e(k) = y(k) - ỹ(k), a generic form of the NARMAX predictor is:

$$\tilde{y}(k) = g\big(y(k-1), y(k-2), \dots, u(k), u(k-1), \dots, \tilde{y}(k-1), \tilde{y}(k-2), \dots\big)$$

This model uses the measured processes y(k) and u(k) as inputs and generates ỹ(k) as the response. So you can approximate the function, $g(\cdot)$, using a black-box model. You can use neural networks as generic forms of this function, using either nonlinear ARX models or neural state-space models.
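As a rough sketch of the black-box idea, the Python code below uses a tiny one-hidden-layer tanh network as the unknown function and feeds each prediction back as an input for the next step. The network size, the choice of inputs (one lag each of the output, input, and prediction), and the hand-set weights are assumptions for illustration; in practice the weights come from training.

```python
import math

def mlp(x, W1, b1, w2, b2):
    """One-hidden-layer network: w2 . tanh(W1 x + b1) + b2."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2

def generic_narmax_predict(y, u, weights, y_pred_init=0.0):
    """Recursive predictor: y_pred[k] = g(y[k-1], u[k], y_pred[k-1])."""
    W1, b1, w2, b2 = weights
    y_pred = [y_pred_init]
    for k in range(1, len(y)):
        x = [y[k - 1], u[k], y_pred[k - 1]]  # measured data plus fed-back prediction
        y_pred.append(mlp(x, W1, b1, w2, b2))
    return y_pred

# Hand-set weights for a single hidden unit (assumption, not trained values).
weights = ([[0.0, 0.0, 1.0]], [0.0], [1.0], 0.0)
preds = generic_narmax_predict([0.0, 0.0, 0.0], [0.0, 0.0, 0.0],
                               weights, y_pred_init=0.5)
```

Because the prediction is itself a network input, the model behaves like a recurrent network driven by the measured signals.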

For a detailed example that shows how to generate NARMAX models using these model structures, see Generate NARMAX Models.

See Also

armax | idNeuralNetwork | idnlarx | nlarx | nlarxOptions | predict | iddata | linearRegressor | getreg | resid | nlgreyest | nlgreyestOptions | idnlgrey | createMLPNetwork | idNeuralStateSpace | nlssest | compare | pe