Choose a Sensor for Unreal Engine Simulation

In Automated Driving Toolbox™, you can obtain high-fidelity sensor data from a virtual environment. This environment is rendered using the Unreal Engine® from Epic Games®.

Simulating models in the 3D visualization environment requires Simulink® 3D Animation™.

The table summarizes the sensor blocks that you can simulate in this environment.

| Sensor Block | Description | Visualization | Example |

|---|---|---|---|

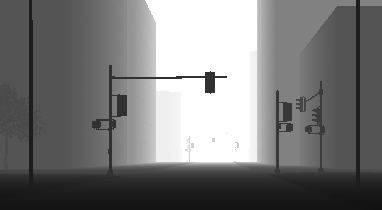

| Display camera images by using a Video Viewer or To Video Display block. Sample visualization:

| Design Lane Marker Detector Using Unreal Engine Simulation Environment | |

Display depth maps by using a Video Viewer or To Video Display block. Sample visualization:

| Depth and Semantic Segmentation Visualization Using Unreal Engine Simulation | ||

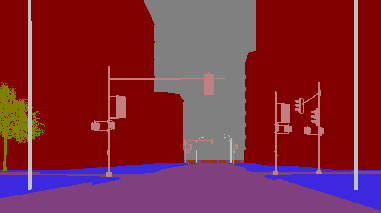

Display semantic segmentation maps by using a Video Viewer or To Video Display block. Sample visualization:

| Depth and Semantic Segmentation Visualization Using Unreal Engine Simulation | ||

| Display camera images by using a Video Viewer or To Video Display block. Sample visualization:

| Simulate Simple Driving Scenario and Sensor in Unreal Engine Environment | |

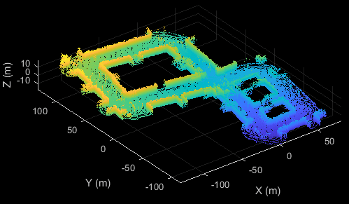

| Display point cloud data by using

| Design Lidar SLAM Algorithm Using Unreal Engine Simulation Environment | |

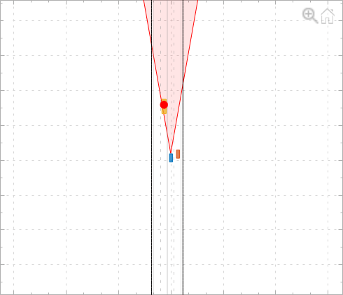

Display lidar coverage areas and detections by using the Bird's-Eye Scope. Sample visualization:

| Visualize Sensor Data from Unreal Engine Simulation Environment | ||

| Display radar coverage areas and detections by using the Bird's-Eye Scope. Sample visualization:

| Simulate Vision and Radar Sensors in Unreal Engine Environment Visualize Sensor Data from Unreal Engine Simulation Environment | |

| Simulation 3D Vision Detection Generator |

| Display vision coverage areas and detections by using the Bird's-Eye Scope. Sample visualization:

| Simulate Vision and Radar Sensors in Unreal Engine Environment |

| Simulation 3D Ultrasonic Sensor |

| Display ultrasonic range coverage areas and range detections by

using the Bird's-Eye Scope. Sample visualization: |

See Also

Blocks

- Simulation 3D Scene Configuration
- Simulation 3D Vehicle with Ground Following
- Simulation 3D Probabilistic Radar Configuration