YAMNet

Libraries: Audio Toolbox / Deep Learning

Description

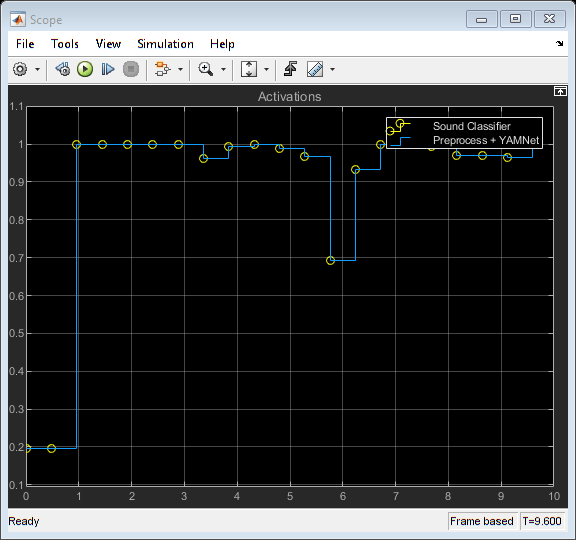

The YAMNet block leverages a pretrained sound classification network that is trained on the AudioSet dataset to predict audio events from the AudioSet ontology.
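As a rough illustration of what "predicting audio events" amounts to for a classifier of this kind, the sketch below assumes the network produces one score per ontology class and that the predicted events are the highest-scoring classes. The helper name `top_events`, the class names, and the scores are hypothetical placeholders. They are not actual output of the block or part of its interface.

```python
# Illustrative sketch only: the labels and scores below are invented
# placeholders, not real YAMNet output.

def top_events(scores, labels, k=1):
    """Return the k (label, score) pairs with the highest scores."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    # Pair each label with its score, then sort by score, highest first.
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


labels = ["Speech", "Dog", "Music"]   # hypothetical subset of class names
scores = [0.10, 0.75, 0.15]           # hypothetical per-class scores

print(top_events(scores, labels, k=2))
```

Under these assumptions, the first entry of the returned list is the single most likely event, and a larger `k` gives a ranked shortlist.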

Examples

Ports

Input

Output

Parameters

Block Characteristics

| Characteristic | Value |
| --- | --- |
| Data Types | |
| Direct Feedthrough | |
| Multidimensional Signals | |
| Variable-Size Signals | |
| Zero-Crossing Detection | |

Algorithms

References

[1] Gemmeke, Jort F., Daniel P. W. Ellis, Dylan Freedman, Aren Jansen, Wade Lawrence, R. Channing Moore, Manoj Plakal, and Marvin Ritter. “Audio Set: An Ontology and Human-Labeled Dataset for Audio Events.” 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, 2017, pp. 776–80. doi:10.1109/ICASSP.2017.7952261.

[2] Hershey, Shawn, Sourish Chaudhuri, Daniel P. W. Ellis, Jort F. Gemmeke, Aren Jansen, R. Channing Moore, Manoj Plakal, et al. “CNN Architectures for Large-Scale Audio Classification.” 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, 2017, pp. 131–35. doi:10.1109/ICASSP.2017.7952132.

Extended Capabilities

Version History

Introduced in R2021b