Train DDPG Agent with Pretrained Actor Network

This example shows how to train a deep deterministic policy gradient (DDPG) agent for lane keeping assist (LKA) in Simulink®. To make training more efficient, the actor of the DDPG agent is initialized with a deep neural network that was previously trained using supervised learning. This actor is trained in the Imitate MPC Controller for Lane Keeping Assist example.

For more information on DDPG agents, see Deep Deterministic Policy Gradient (DDPG) Agent.

Fix Random Number Stream for Reproducibility

The example code might involve computation of random numbers at various stages. Fixing the random number stream at the beginning of various sections in the example code preserves the random number sequence in the section every time you run it, and increases the likelihood of reproducing the results. For more information, see Results Reproducibility. Fix the random number stream with seed 0 and random number algorithm Mersenne twister. For more information on controlling the seed used for random number generation, see rng.

previousRngState = rng(0,"twister");

The output previousRngState is a structure that contains information about the previous state of the stream. You will restore the state at the end of the example.
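The following base-MATLAB sketch shows why fixing the stream helps. Reseeding with the same seed and generator replays the same draws, and the structure that rng returns lets you put the generator back afterward. The variable names are illustrative only.

```matlab
% Save the current generator state and seed the Mersenne Twister with 0.
savedState = rng(0,"twister");
firstDraw = rand(1,3);

% Reseeding with the same seed and algorithm replays the same sequence.
rng(0,"twister");
secondDraw = rand(1,3);

% Restore whatever state the generator had before this snippet ran.
rng(savedState);

isReproducible = isequal(firstDraw,secondDraw)
```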

Simulink Model

The training goal for the lane-keeping application is to keep the ego vehicle traveling along the centerline of a lane by adjusting the front steering angle. This example uses the same ego vehicle dynamics and sensor dynamics as the Train DQN Agent for Lane Keeping Assist example.

m = 1575;    % total vehicle mass (kg)
Iz = 2875;   % yaw moment of inertia (mNs^2)
lf = 1.2;    % long. distance from center of gravity to front tires (m)
lr = 1.6;    % long. distance from center of gravity to rear tires (m)
Cf = 19000;  % cornering stiffness of front tires (N/rad)
Cr = 33000;  % cornering stiffness of rear tires (N/rad)
Vx = 15;     % longitudinal velocity (m/s)
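The example does not show the vehicle equations inside rlActorLKAMdl. As a hedged illustration of how these parameters typically enter the lateral dynamics, the following sketch assembles a common linear bicycle model and discretizes it with a zero-order hold at the 0.1 s sample time. The model has two states, lateral velocity and yaw rate, and one input, the front steering angle. The factor of 2 on the cornering stiffnesses assumes that each value applies per tire on a two-tire axle. Treat the whole model form as an assumption, because the Simulink model might differ.

```matlab
% Vehicle parameters (same values as in the example).
m = 1575; Iz = 2875; lf = 1.2; lr = 1.6;
Cf = 19000; Cr = 33000; Vx = 15;
Ts = 0.1;

% Assumed linear bicycle model: x = [Vy; yaw rate], input = front steering angle.
% The factor 2 assumes Cf and Cr are per-tire stiffnesses on a two-tire axle.
A = [-(2*Cf+2*Cr)/(m*Vx),         -Vx-(2*Cf*lf-2*Cr*lr)/(m*Vx);
     -(2*Cf*lf-2*Cr*lr)/(Iz*Vx),  -(2*Cf*lf^2+2*Cr*lr^2)/(Iz*Vx)];
B = [2*Cf/m; 2*Cf*lf/Iz];

% Zero-order-hold discretization using the exponential of an augmented matrix.
n = size(A,1);
M = expm([A B; zeros(1,n+1)]*Ts);
Ad = M(1:n,1:n);
Bd = M(1:n,n+1);

continuousPoles = eig(A)
discretePoles = eig(Ad)
```

Under this assumed model, the continuous poles have negative real parts and the discrete poles lie inside the unit circle, so the open-loop lateral dynamics at 15 m/s are stable.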

Define the sample time, Ts, and simulation duration, T, in seconds.

Ts = 0.1; T = 15;

The output of the LKA system is the front steering angle of the ego vehicle. Considering the physical limitations of the ego vehicle, constrain its steering angle to the range [-60,60] degrees. Specify the constraints in radians.

u_min = -1.04; u_max = 1.04;
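The limit of 1.04 rad is 60 degrees (π/3 ≈ 1.0472 rad) rounded down to two decimals, so the constraint sits slightly inside ±60 degrees. You can confirm this quickly with deg2rad and rad2deg.

```matlab
u_max = 1.04;               % upper steering limit used in this example (rad)
limitDeg = rad2deg(u_max)   % about 59.6 degrees
exactRad = deg2rad(60)      % pi/3, about 1.0472 rad
```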

Define the curvature of the road as a constant 0.001 (1/m).

rho = 0.001;
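For a sense of scale, a constant curvature of 0.001 (1/m) corresponds to a 1000 m radius. At Vx = 15 m/s, standard kinematic relations give the yaw rate needed to follow the curve (Vx*rho) and the centripetal acceleration (Vx^2*rho). These relations are general vehicle kinematics, not something the example computes.

```matlab
rho = 0.001;   % road curvature (1/m)
Vx = 15;       % longitudinal velocity (m/s)

R = 1/rho                 % radius of curvature: 1000 m
yawRateDes = Vx*rho       % yaw rate needed to follow the curve: 0.015 rad/s
latAccel = Vx^2*rho       % centripetal acceleration: 0.225 m/s^2
```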

Set initial values for the lateral deviation (e1_initial) and the relative yaw angle (e2_initial). During training, these initial conditions are set to random values for each training episode.

e1_initial = 0.2; e2_initial = -0.1;

Open the model.

mdl = "rlActorLKAMdl";

open_system(mdl)

Define the path to the RL Agent block within the model.

agentblk = mdl + "/RL Agent";

Create Environment

Create a reinforcement learning environment object for the ego vehicle. To do so, first define the observation and action specifications. These observations and actions are the same as the features for supervised learning used in Imitate MPC Controller for Lane Keeping Assist.

The six observations for the environment are the lateral velocity $V_y$, yaw rate $\dot{\psi}$, lateral deviation $e_1$, relative yaw angle $e_2$, steering angle at the previous step $u_0$, and curvature $\rho$.

obsInfo = rlNumericSpec([6 1], ...
    LowerLimit=-inf*ones(6,1), ...
    UpperLimit=inf*ones(6,1))

obsInfo =

rlNumericSpec with properties:

LowerLimit: [6×1 double]

UpperLimit: [6×1 double]

Name: [0×0 string]

Description: [0×0 string]

Dimension: [6 1]

DataType: "double"

obsInfo.Name = "observations";

The action for the environment is the front steering angle. Specify the steering angle constraints when creating the action specification object.

actInfo = rlNumericSpec([1 1], ...
    LowerLimit=u_min, ...
    UpperLimit=u_max);
actInfo.Name = "steering";

In the model, the Signal Processing for LKA block creates the observation vector signal, computes the reward function, and calculates the stop signal.

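The reward $r_t$, provided at every time step $t$, is as follows, where $u$ is the control input from the previous time step $t-1$.

$$r_t = -\left(10e_1^2 + 5e_2^2 + 2u^2 + 5\dot{e}_1^2 + 5\dot{e}_2^2\right)$$

The simulation stops when $|e_1| > 1$.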
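The following sketch writes the per-step reward and stop logic as plain MATLAB anonymous functions. It assumes the quadratic penalty with weights 10, 5, 2, 5, and 5 and the |e1| > 1 termination used in the Train DQN Agent for Lane Keeping Assist example. The Signal Processing for LKA block is the authoritative implementation, and lkaReward and lkaIsDone are hypothetical names.

```matlab
% Hypothetical helpers that mirror the assumed reward and stop condition.
% e1, e2: lateral deviation and relative yaw angle; e1dot, e2dot: their rates;
% u: steering command from the previous time step.
lkaReward = @(e1,e2,e1dot,e2dot,u) ...
    -(10*e1.^2 + 5*e2.^2 + 2*u.^2 + 5*e1dot.^2 + 5*e2dot.^2);
lkaIsDone = @(e1) abs(e1) > 1;

% Evaluate at e1 = 0.2, e2 = -0.1, with zero rates and zero previous input.
r0 = lkaReward(0.2,-0.1,0,0,0)   % -0.45
done0 = lkaIsDone(0.2)           % false
```

Because every term is squared and the weights are positive, the reward is at most zero. It reaches zero only when the vehicle is centered, aligned, steady, and not steering.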

Create the reinforcement learning environment.

env = rlSimulinkEnv(mdl,agentblk,obsInfo,actInfo);

To define the initial condition for lateral deviation and relative yaw angle, specify an environment reset function using an anonymous function handle. The localResetFcn function, which is defined at the end of the example, sets the initial lateral deviation and relative yaw angle to random values.

env.ResetFcn = @(in)localResetFcn(in);
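To see which initial conditions the reset function produces without running Simulink, the following base-MATLAB sketch evaluates the same random expressions used in localResetFcn for 1,000 hypothetical episodes. It reproduces only the sampling and does not call setVariable or the environment.

```matlab
% Fix the stream so the draws are repeatable, and keep the old state.
savedState = rng(0,"twister");
numEpisodes = 1000;

% Same expressions as in localResetFcn; (-1 + 2*rand) is uniform on [-1, 1].
e1Samples = 0.5*(-1 + 2*rand(numEpisodes,1));   % lateral deviation (m)
e2Samples = 0.1*(-1 + 2*rand(numEpisodes,1));   % relative yaw angle (rad)

% The observed ranges approach [-0.5, 0.5] and [-0.1, 0.1].
e1Range = [min(e1Samples) max(e1Samples)]
e2Range = [min(e2Samples) max(e2Samples)]

rng(savedState);
```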

Create DDPG Agent

DDPG agents use a parametrized deterministic policy over continuous action spaces, which is learned by a continuous deterministic actor, and a parametrized Q-value function approximator to estimate the value of the policy. Use neural networks to model both the parametrized policy within the actor and the Q-value function within the critic. For this example, use the helper functions createLaneKeepingCritic and createLaneKeepingActor to create the critic and the actor, along with their optimizer option sets.

[critic,criticOpts] = createLaneKeepingCritic(obsInfo,actInfo); [actor,actorOpts] = createLaneKeepingActor(obsInfo,actInfo);

These initial actor and critic networks have random initial parameter values.

Specify the DDPG agent options using rlDDPGAgentOptions, including the optimizer options for the actor and critic.

agentOptions = rlDDPGAgentOptions( ...
    SampleTime=Ts, ...
    ActorOptimizerOptions=actorOpts, ...
    CriticOptimizerOptions=criticOpts, ...
    ExperienceBufferLength=1e6, ...
    MiniBatchSize=256, ...
    TargetSmoothFactor=1e-3);
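TargetSmoothFactor=1e-3 sets τ in the soft target update θ' ← τθ + (1 - τ)θ', which DDPG uses so that the target actor and critic slowly track the learned ones. The following base-MATLAB sketch applies that update to a single scalar stand-in for a network parameter while holding the learned value fixed. The scalar setup is hypothetical and only illustrates the rate.

```matlab
tau = 1e-3;          % TargetSmoothFactor
theta = 1;           % learned parameter, held fixed for this illustration
thetaTarget = 0;     % target parameter starts away from the learned value

numUpdates = 1000;
for k = 1:numUpdates
    % Soft update: move the target a fraction tau toward the learned value.
    thetaTarget = tau*theta + (1 - tau)*thetaTarget;
end

% For a fixed theta, the remaining gap shrinks by a factor (1 - tau) per update.
gapRemaining = theta - thetaTarget
gapPredicted = (1 - tau)^numUpdates
```

With τ = 1e-3, roughly 37% of the initial gap remains after 1,000 target updates. Small smoothing factors therefore make the targets move slowly.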

You can also set or modify the agent options using dot notation.

agentOptions.NoiseOptions.StandardDeviation = 0.3;

% Set MeanAttractionConstant to 1/Ts so that the Ornstein-Uhlenbeck action
% noise becomes uncorrelated Gaussian noise.
agentOptions.NoiseOptions.MeanAttractionConstant = 1/Ts;

Create the DDPG agent using the specified actor and critic objects and the agent options. For more information, see rlDDPGAgent.

agent = rlDDPGAgent(actor,critic,agentOptions);

Alternatively, you can create the agent first, then access its option object and modify the options using dot notation.
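By default, rlDDPGAgentOptions uses Ornstein-Uhlenbeck (OU) action noise. This sketch assumes the discrete OU update x(k) = x(k-1) + MeanAttractionConstant*(Mean - x(k-1))*Ts + StandardDeviation*w(k)*sqrt(Ts), where w(k) is standard normal. Under that assumption, setting MeanAttractionConstant = 1/Ts cancels the x(k-1) terms, and each sample reduces to Mean + StandardDeviation*sqrt(Ts)*w(k), which is uncorrelated Gaussian noise. The following base-MATLAB sketch checks that algebra with a fixed sequence of normal draws. It does not use the toolbox noise object, so verify the update form against the noise options documentation.

```matlab
Ts = 0.1;
sigma = 0.3;             % StandardDeviation
mac = 1/Ts;              % MeanAttractionConstant
mu = 0;                  % Mean

savedState = rng(1,"twister");
numSteps = 50;
w = randn(numSteps,1);   % standard normal draws shared by both computations
rng(savedState);

% Recursive OU update (assumed form).
x = zeros(numSteps,1);
x(1) = mu + sigma*sqrt(Ts)*w(1);
for k = 2:numSteps
    x(k) = x(k-1) + mac*(mu - x(k-1))*Ts + sigma*sqrt(Ts)*w(k);
end

% With mac*Ts = 1, the recursion collapses to independent Gaussian samples.
xGaussian = mu + sigma*sqrt(Ts)*w;
maxDifference = max(abs(x - xGaussian))
```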

Train Agent

Fix the random stream for reproducibility.

rng(0,"twister");

As a baseline, train the agent with an actor that has random initial parameters. To train the agent, first specify the training options. For this example, use the following options.

- Run training for a maximum of 10,000 episodes, with each episode lasting at most 150 time steps.

- Display the training progress in the Reinforcement Learning Training Monitor dialog box.

- Stop training when the evaluation statistic exceeds -12.

- Save a copy of the agent when the evaluation statistic exceeds -15.

For more information on training options, see rlTrainingOptions.

maxepisodes = 10000;
maxsteps = T/Ts;
trainingOpts = rlTrainingOptions( ...
    MaxEpisodes=maxepisodes, ...
    MaxStepsPerEpisode=maxsteps, ...
    Verbose=false, ...
    Plots="training-progress", ...
    StopTrainingCriteria="EvaluationStatistic", ...
    StopTrainingValue=-12, ...
    SaveAgentCriteria="EvaluationStatistic", ...
    SaveAgentValue=-15);

Train the agent using the train function. Training is a computationally intensive process that takes several hours to complete. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true.

doTraining = false;
if doTraining
    % Every 10 episodes, evaluate the performance of the greedy policy by
    % running 5 evaluation episodes.
    evaluator = rlEvaluator( ...
        EvaluationFrequency=10, ...
        NumEpisodes=5);
    % Train the agent.
    trainingStats = train(agent,env,trainingOpts,Evaluator=evaluator);
else
    % Load the pretrained agent for the example.
    load("ddpgFromScratch.mat");
end

Train Agent with Pretrained Actor

You can set the actor network of your agent to a deep neural network that has been previously trained. For this example, use the deep neural network from the Imitate MPC Controller for Lane Keeping Assist example. This network was trained to imitate a model predictive controller using supervised learning.

Load the pretrained actor network.

load("imitateMPCNetActorObj.mat","imitateMPCNetObj");

Create a continuous deterministic actor object from the pretrained network.

supervisedActor = rlContinuousDeterministicActor( ...
    imitateMPCNetObj, ...
    obsInfo, ...
    actInfo);

Check that the network used by supervisedActor is the same one that was loaded. To do so, evaluate both the network and the actor using the same random input observation.

testData = rand(6,1);

Evaluate the deep neural network.

predictImNN = predict(imitateMPCNetObj,testData');

Evaluate the actor.

evaluateRLRep = getAction(supervisedActor,{testData});

Compare the results.

error = evaluateRLRep{:} - predictImNN

error = single

    0

Create a DDPG agent using the pretrained actor.

agent = rlDDPGAgent(supervisedActor,critic,agentOptions);

Reduce the maximum number of training episodes and train the agent using the train function. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true.

trainingOpts.MaxEpisodes = 5000;
doTraining = false;
if doTraining
    % Every 10 episodes, evaluate the performance of the greedy policy by
    % running 5 evaluation episodes.
    evaluator = rlEvaluator( ...
        EvaluationFrequency=10, ...
        NumEpisodes=5);
    % Train the agent.
    trainingStats = train(agent,env,trainingOpts,Evaluator=evaluator);
else
    % Load the pretrained agent for the example.
    load("ddpgFromPretrained.mat");
end

By using the pretrained actor network, the training of the DDPG agent converges to a satisfactory policy in fewer episodes.

Simulate the Trained DDPG Agent

Fix the random stream for reproducibility.

rng(0,"twister");

To validate the performance of the trained agent, uncomment the following two lines and simulate it within the environment. For more information on agent simulation, see rlSimulationOptions and sim.

% simOptions = rlSimulationOptions(MaxSteps=maxsteps);
% experience = sim(env,agent,simOptions);

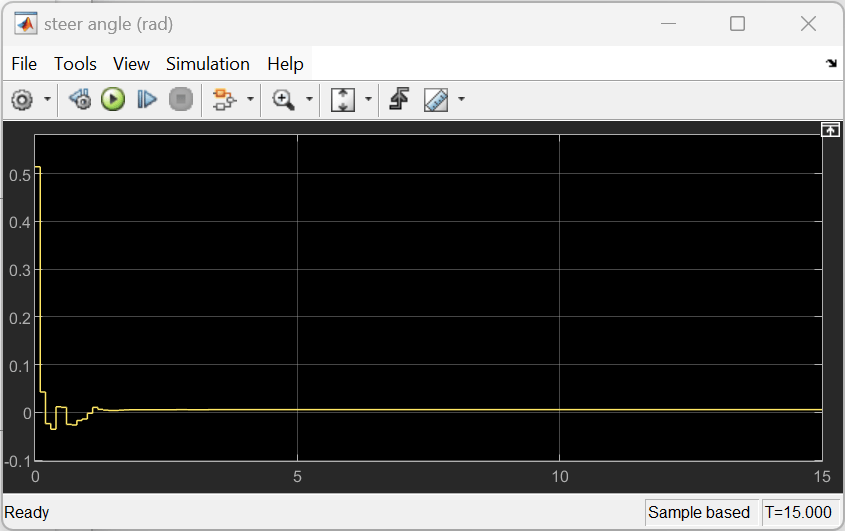

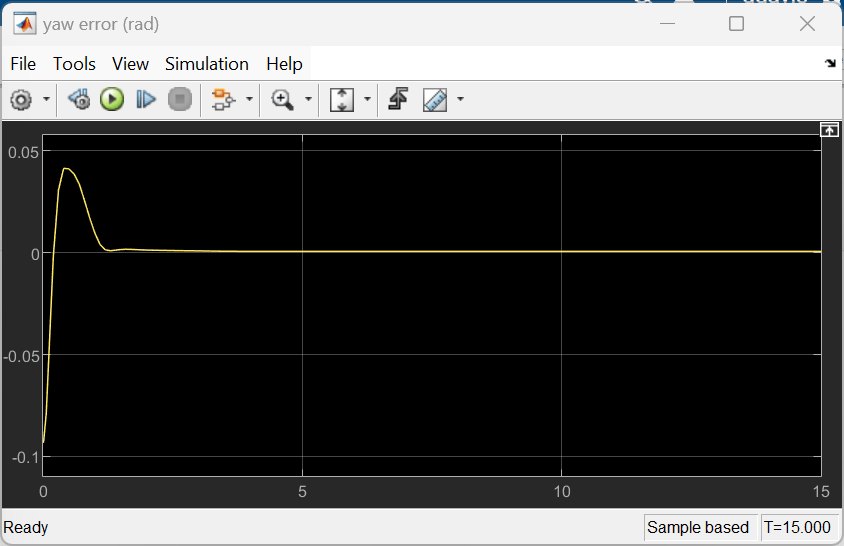

To check the performance of the trained agent within the Simulink model, simulate the model using the previously defined initial conditions (e1_initial = 0.2 and e2_initial = -0.1).

sim(mdl)

In the simulation results, the lateral deviation (top plot) and the relative yaw angle (bottom plot) are both driven to zero. The vehicle starts 0.2 m from the centerline with a relative yaw angle of -0.1 rad. The lane-keeping controller brings the ego vehicle onto the centerline after about two seconds, and the steering angle (middle plot) shows that the controller reaches steady state in about the same time.
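To put a number on "about two seconds", you can compute a settling time from a logged signal. The following sketch uses a synthetic, hypothetical exponential decay in place of the logged lateral deviation. It reports the first time after which the signal stays within 2% of its initial magnitude. Both the signal and the 2% band are illustrative assumptions, not data from this model.

```matlab
% Synthetic lateral-deviation trace (hypothetical, not logged from the model).
Ts = 0.1;
t = (0:Ts:15)';
e1 = 0.2*exp(-2*t);

% Settling time: first time after which |e1| stays within 2% of its initial size.
band = 0.02*abs(e1(1));
lastOutside = find(abs(e1) > band, 1, 'last');
if isempty(lastOutside)
    settlingTime = t(1);                 % already inside the band at t = 0
elseif lastOutside == numel(t)
    settlingTime = NaN;                  % never settles within the record
else
    settlingTime = t(lastOutside + 1)
end
```

To use logged data, replace t and e1 with the logged time vector and lateral deviation signal from the simulation.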

Restore the random number stream using the information stored in previousRngState.

rng(previousRngState);

Local Functions

function in = localResetFcn(in)
    % Set a random lateral deviation, uniformly distributed in [-0.5, 0.5] m.
    in = setVariable(in,"e1_initial", 0.5*(-1+2*rand));
    % Set a random relative yaw angle, uniformly distributed in [-0.1, 0.1] rad.
    in = setVariable(in,"e2_initial", 0.1*(-1+2*rand));
end

See Also

Functions

train | sim | rlSimulinkEnv

Objects

rlDDPGAgent | rlDDPGAgentOptions | rlQValueFunction | rlContinuousDeterministicActor | rlTrainingOptions | rlSimulationOptions | rlOptimizerOptions

Blocks

RL Agent

Topics

- Imitate MPC Controller for Lane Keeping Assist

- Train DQN Agent for Lane Keeping Assist

- Compare DDPG Agent to LQR Controller

- Lane Keeping Assist System Using Model Predictive Control (Model Predictive Control Toolbox)

- Define Observation and Reward Signals in Custom Environments

- Deep Deterministic Policy Gradient (DDPG) Agent

- Train Reinforcement Learning Agents