rlMBPOAgent

Description

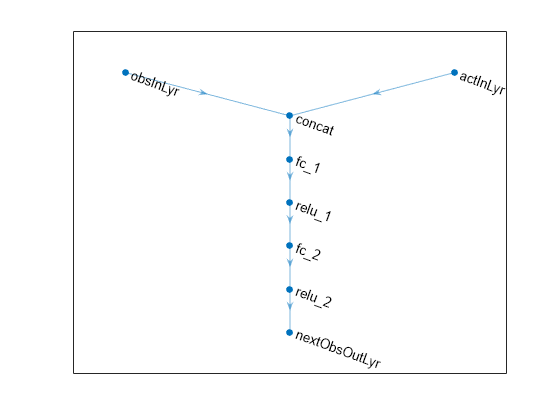

A model-based policy optimization (MBPO) agent is a model-based, off-policy reinforcement learning method for environments with a discrete or continuous action space. An MBPO agent contains an internal model of the environment, which it uses to generate additional experiences without interacting with the environment. During training, the agent first collects real experiences by interacting with the environment. It uses these experiences to train the internal environment model, and then uses the trained model to generate additional experiences. The training algorithm uses both the real and the generated experiences to update the agent policy.
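The data flow described above can be illustrated with a toy sketch in plain MATLAB. This is not the toolbox implementation: the 1-D linear dynamics `trueStep`, the least-squares model, and all variable names are assumptions chosen only to show how real and model-generated experiences end up in one training set.

```matlab
% Toy illustration only (hypothetical names, not the rlMBPOAgent internals).
rng(0);                                  % reproducible random numbers
trueStep = @(s,a) 0.9*s + 0.5*a;         % assumed "real" environment dynamics

% 1) Collect real experiences [s, a, sNext] by interacting with the environment.
numReal = 50;
s = randn(numReal,1);
a = randn(numReal,1);
realExp = [s, a, trueStep(s,a)];

% 2) Train the internal model on the real experiences (least-squares fit).
theta = realExp(:,1:2) \ realExp(:,3);   % fitted coefficients for [s, a]
modelStep = @(s,a) [s, a]*theta;

% 3) Generate additional experiences from the model, starting at real states.
numGen = 200;
idx = randi(numReal,numGen,1);
sGen = realExp(idx,1);
aGen = randn(numGen,1);
genExp = [sGen, aGen, modelStep(sGen,aGen)];

% 4) A policy update would sample from both real and generated experiences.
trainingData = [realExp; genExp];
```

In this sketch the generated set is four times larger than the real set, which reflects the motivation for the approach: the model supplies extra training data without further environment interaction.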

For more information on MBPO agents, see Model-Based Policy Optimization (MBPO) Agent. For more information on the different types of reinforcement learning agents, see Reinforcement Learning Agents.

Note

MBPO agents do not support recurrent networks or base SAC agents with a hybrid action space.

Creation

Description

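agent = rlMBPOAgent(baseAgent,envModel) creates a model-based policy optimization agent with default options and sets the BaseAgent and EnvModel properties.

agent = rlMBPOAgent(___,agentOptions) creates a model-based policy optimization agent using the specified options and sets the AgentOptions property.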
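The following sketch shows one way the second syntax might be used. It assumes Reinforcement Learning Toolbox and Deep Learning Toolbox are installed, uses the predefined continuous cart-pole environment, and builds a single-network environment model. The network size, the helper functions `toyReward` and `toyIsDone`, and their thresholds are hypothetical placeholders, not values taken from this page.

```matlab
% Environment and its observation and action specifications.
env = rlPredefinedEnv("CartPole-Continuous");
obsInfo = getObservationInfo(env);
actInfo = getActionInfo(env);
numObs = obsInfo.Dimension(1);
numAct = actInfo.Dimension(1);

% Base off-policy agent with default networks (no recurrent layers).
baseAgent = rlSACAgent(obsInfo,actInfo);

% Transition network: (state, action) -> predicted next observation.
net = layerGraph(featureInputLayer(numObs,Name="state"));
net = addLayers(net,featureInputLayer(numAct,Name="action"));
net = addLayers(net,[
    concatenationLayer(1,2,Name="concat")
    fullyConnectedLayer(64,Name="fc1")
    reluLayer(Name="relu1")
    fullyConnectedLayer(numObs,Name="nextObservation")]);
net = connectLayers(net,"state","concat/in1");
net = connectLayers(net,"action","concat/in2");
net = dlnetwork(net);

transitionFcn = rlContinuousDeterministicTransitionFunction( ...
    net,obsInfo,actInfo, ...
    ObservationInputNames="state", ...
    ActionInputNames="action", ...
    NextObservationOutputNames="nextObservation");

% Environment model with custom reward and termination functions.
envModel = rlNeuralNetworkEnvironment(obsInfo,actInfo, ...
    transitionFcn,@toyReward,@toyIsDone);

% Create the MBPO agent with an explicit (here default) options object.
agentOptions = rlMBPOAgentOptions;
agent = rlMBPOAgent(baseAgent,envModel,agentOptions);

% Hypothetical placeholder functions; thresholds are illustrative only.
function isDone = toyIsDone(~,~,nextObs)
    if iscell(nextObs), nextObs = nextObs{1}; end
    x = nextObs(1,:);          % cart position
    theta = nextObs(3,:);      % pole angle
    isDone = abs(x) > 2.4 | abs(theta) > 12*pi/180;
end

function reward = toyReward(obs,action,nextObs)
    done = toyIsDone(obs,action,nextObs);
    reward = double(~done) - 5*double(done);
end
```

A single deterministic transition function keeps the sketch short. The resulting `agent` exposes the base agent and the environment model through its BaseAgent and EnvModel properties.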

Properties

Object Functions

Examples

Version History

Introduced in R2022a