Ego Localization Using Lane Detections and HD Map for Scenario Generation

This example shows how to perform lane-level localization of the ego vehicle using lane detections, HD map data, and GPS data, and then generate a RoadRunner scenario.

Introduction

Using recorded vehicle data, you can generate virtual driving scenarios to recreate a real-world scenario. Virtual scenarios enable you to study and analyze these recorded scenarios. To generate a reliable virtual scenario, you must accurately localize the lateral and longitudinal positions of the ego vehicle. GPS sensor data can provide road-level localization, but it often suffers from the drift in the lateral or longitudinal position due to noise and bias. This example shows how to correct drift in ego positions by using lane detections, HD map data, and GPS data and get accurate lane-level localization of ego trajectory. Then, you generate a RoadRunner scenario using the estimated ego trajectory. If you also want to correct the ego orientation, see Ego Vehicle Localization Using GPS and IMU Fusion for Scenario Generation.

In this example, you:

Load and preprocess sensor data and lane detections.

Extract lanes information from an HD map.

Localize the ego vehicle.

Simulate a RoadRunner scenario.

Load Sensor Data and Lane Detections

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check if the support package is installed and, if it is not installed, install it using the Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Download the ZIP file containing a subset of sensor data from the Pandaset data, and then unzip the file. This subset contains a continuous sequence of 80 images and their GPS information.

dataFolder = tempdir; dataFilename = "PandasetSequence.zip"; url = "https://ssd.mathworks.com/supportfiles/driving/data/" + dataFilename; filePath = fullfile(dataFolder,dataFilename); if ~isfile(filePath) websave(filePath,url); end unzip(filePath,dataFolder); dataset = fullfile(dataFolder,"PandasetSequence");

Load the camera and GPS data into MATLAB® using the helperLoadData function. In this example, you use the camera data for visual validation of the generated scenario.

[cameraData,gpsData] = helperLoadData(dataset);

gpsData is a table with these columns:

timeStamp— Time, in seconds, at which the GPS data was collected.latitude— Latitude coordinate value of the ego vehicle. Units are in degrees.longitude— Longitude coordinate value of the ego vehicle. Units are in degrees.altitude— Altitude coordinate value of the ego vehicle. Units are in meters.

Display the first five entries of gpsData.

gpsData(1:5,:)

ans=5×4 table

timeStamp latitude longitude altitude

__________ ________ _________ ________

1.5576e+09 37.376 -122.06 41.409

1.5576e+09 37.376 -122.06 41.416

1.5576e+09 37.376 -122.06 41.421

1.5576e+09 37.376 -122.06 41.423

1.5576e+09 37.376 -122.06 41.428

This example uses recorded lane detections data. If you do not have recorded lane detections, you can extract lane information from recorded camera data. For more information, see Extract Lane Information from Recorded Camera Data for Scene Generation.

load("laneDetectionsPandaset.mat","laneDetections");

Display the first five entries of laneDetections.

laneDetections(1:5,:)

ans=5×2 table

timeStamp LaneBoundaryData

__________ ___________________________

1.5576e+09 {1×4 parabolicLaneBoundary}

1.5576e+09 {1×4 parabolicLaneBoundary}

1.5576e+09 {1×4 parabolicLaneBoundary}

1.5576e+09 {1×4 parabolicLaneBoundary}

1.5576e+09 {1×4 parabolicLaneBoundary}

Crop and Process Sensor Data

Crop the GPS and lane detection data relative to the GPS timestamp range by using the helperCropData function.

startTime = gpsData.timeStamp(1); endTime = gpsData.timeStamp(end); % Pack all the tables into a cell array. recordedData = {gpsData,laneDetections}; % Crop the data. recordedData = helperCropData(recordedData,startTime,endTime);

The timestamp values in the recorded data are in the POSIX® format, which Scenario Builder for Automated Driving Toolbox™ supports. Use the helperNormTimeInSecs function to normalize the timestamps using these arguments:

scale— Scale by which to convert the timestamp. Because the recorded timestamps are already in seconds, specify this argument as1.offset— Offset of the simulation start time. Specify the offset as the first timestamp ingpsData.

scale = 1; offset = startTime; recordedData = helperNormTimeInSecs(recordedData,offset,scale);

Extract the GPS data and lane detection data with updated timestamp values from recordedData.

gpsData = recordedData{1};

laneDetections = recordedData{2};Convert geographic GPS coordinates to local east-north-up (ENU) coordinates by using the latlon2local function. Specify an origin by using the latitude, longitude, and altitude values of the first entry in the GPS data. The transformed coordinates define the trajectory waypoints of the ego vehicle. Units are in meters.

geoReference = [gpsData.latitude(1) gpsData.longitude(1) gpsData.altitude(1)]; [xEast,yNorth,zUp] = latlon2local(gpsData.latitude,gpsData.longitude,gpsData.altitude,geoReference);

Create the ego trajectory using the waypoints and their corresponding times of arrival by using the waypointTrajectory (Navigation Toolbox) object.

egoTrajectory = waypointTrajectory([xEast yNorth zUp],gpsData.timeStamp);

Preprocess Lane Detections

Preprocess the lane detections data to convert it to a laneData object. Use a laneData object to efficiently store and read lane detection labels.

laneDetectionData = laneData(laneDetections.timeStamp,laneDetections.LaneBoundaryData);

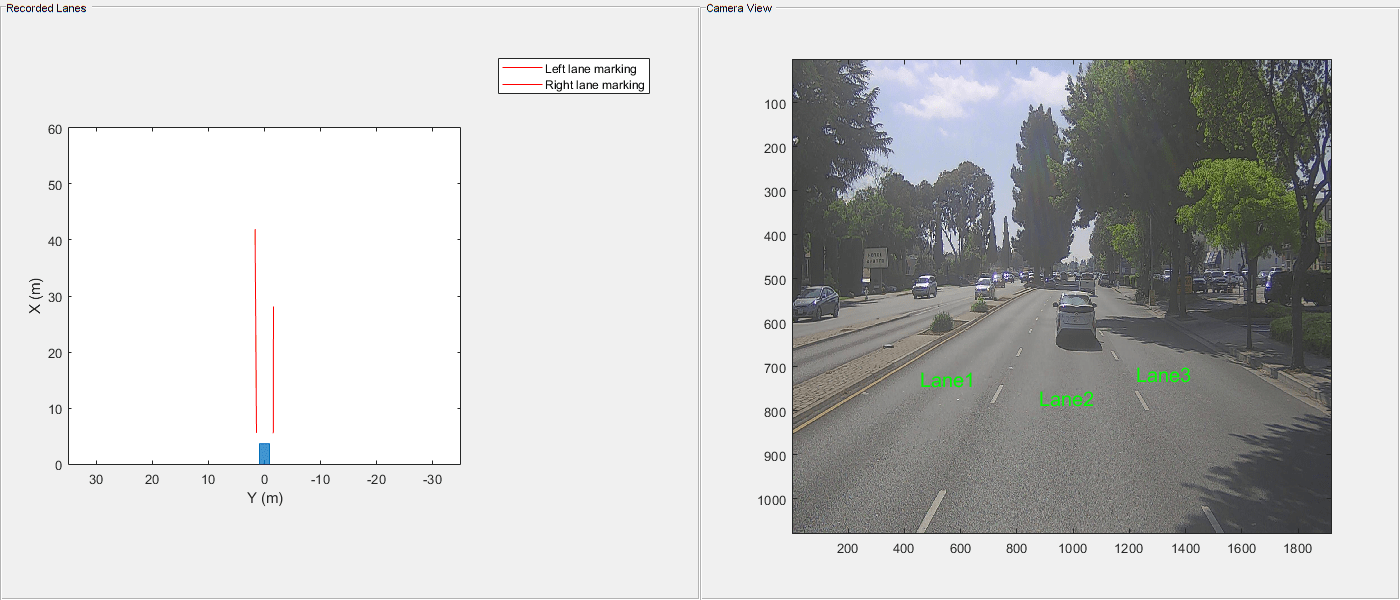

Visualize lane detections and camera images for the first and the last frame of the sequence using the birdsEyePlot function and helperPlotLanesWithCamera function.

currentFigure = figure(Position=[0 0 1400 600]); hPlot = axes(uipanel(currentFigure,Position=[0 0 0.5 1],Title="Recorded Lanes")); bep = birdsEyePlot(XLim=[0 60],YLim=[-35 35],Parent=hPlot); camPlot = axes(uipanel(currentFigure,Position=[0.5 0 0.5 1],Title="Camera View")); helperPlotLanesWithCamera(bep,camPlot,laneDetectionData,cameraData);

For ego localization, you need to know the ego vehicle offset from the ego lanes. Use the helperGetEgoLaneOffsets function to extract the offsets of the ego lane from the lane boundaries of the laneData object.

egoLaneData = helperGetEgoLaneOffsets(laneDetectionData);

egoLaneData is a table with these columns:

timeStamp— Time, in seconds, at which the lanes were detected.egoLeftOffset— Lateral offset of the ego vehicle position from the left lane boundary at each timestamp. Units are in meters.egoRightOffset— Lateral offset of the ego vehicle position from the right lane boundary at each timestamp. Units are in meters.

Display the first five entries of egoLaneData.

egoLaneData(1:5,:)

ans=5×3 table

timeStamp egoLeftOffset egoRightOffset

_________ _____________ ______________

0 1.3751 -1.5132

0.10004 1.3654 -1.5825

0.2001 1.385 -1.562

0.30017 1.385 -1.4658

0.40026 1.4242 -1.5543

Specify the lane index for the first waypoint of the ego vehicle on the basis of visual inspection of camera data. You must start numbering the lanes in left-to-right order along the travel direction of the ego vehicle. For this example, the first waypoint of the ego vehicle is on the second lane.

firstEgoWaypointLaneIdx = 2;

For ego localization, you need to know the lane index at every time stamp. Use the helperGetEgoWayPointLaneIndex function to get the lane index of the ego vehicle.

egoLaneData = helperGetEgoWayPointLaneIndex(egoLaneData,firstEgoWaypointLaneIdx);

Display the first five entries of egoLaneData. Notice that the table includes the currentLane column, which specifies the lane index of the ego vehicle at each timestamp.

egoLaneData(1:5,:)

ans=5×4 table

timeStamp egoLeftOffset egoRightOffset currentLane

_________ _____________ ______________ ___________

0 1.3751 -1.5132 2

0.10004 1.3654 -1.5825 2

0.2001 1.385 -1.562 2

0.30017 1.385 -1.4658 2

0.40026 1.4242 -1.5543 2

Extract Lane Boundaries from HD Map

To localize the vehicle on the HD map, you also need to obtain the lane information from the HD map. In this example, you use a RoadRunner scene with the HD map information and then extract the lane information from the RoadRunner scene. For information on how to generate RoadRunner scene from recorded sensor data, see Generate RoadRunner Scene Using Labeled Camera Images and Raw Lidar Data.

Specify the path to your local RoadRunner installation folder. This code shows the path for the default installation location on Windows®.

rrAppPath = "C:\Program Files\RoadRunner R2023a\bin\win64";Specify the path to your RoadRunner project. This code shows the path to a sample project folder on Windows.

rrProjectPath = "C:\RR\MyProjects";Open RoadRunner using the specified path to your project.

rrApp = roadrunner(rrProjectPath,InstallationFolder=rrAppPath);

This example provides a RoadRunner scene, which is compatible with the Pandaset sequence used in this example. Copy the scene to the RoadRunner project and open the scene. To learn more about the RoadRunner environment, see RoadRunner Project and Scene System (RoadRunner).

copyfile("pandasetSequence.rrscene",fullfile(rrProjectPath,"Scenes")); openScene(rrApp,"pandasetSequence.rrscene");

To extract the lane boundary information from the RoadRunner scene, you need a RoadRunner scenario first. Create a new RoadRunner scenario.

newScenario(rrApp);

RoadRunner Scenario can import actor trajectories using CSV files. Write the extracted raw GPS trajectory to a CSV file using the helperWriteRRCSVTrajectory function.

helperWriteRRCSVTrajectory(gpsData.timeStamp,[xEast,yNorth,zUp],"gpsTrajectory.csv");Import the GPS trajectory from the CSV file. Change the color of the vehicle to visually differentiate it from other vehicles. Select the vehicle in the scenario editing canvas. In the Attributes pane, click the Color box and select the red color patch.

format = "CSV Trajectory"; filename= fullfile(pwd,"gpsTrajectory.csv"); importScenario(rrApp,filename,format);

Create a scenario simulation object for the current RoadRunner scenario using the createSimulation function. The simulation object enables you to programmatically interact with the RoadRunner scenario simulation using MATLAB®.

rrSim = createSimulation(rrApp);

Use the helperGetLaneInfo function to read the lane boundary information from RoadRunner.

[lanes,laneBoundaries] = helperGetLaneInfo(rrSim);

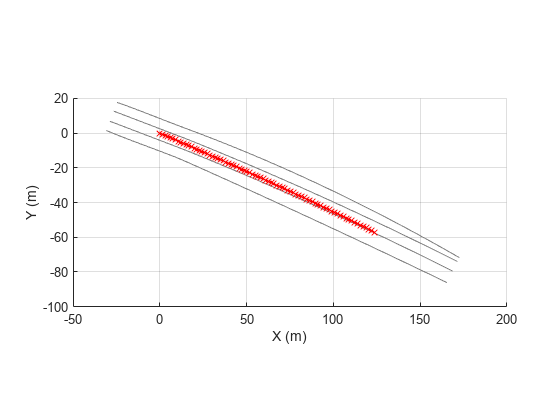

Plot the ego trajectory from GPS on the extracted scene information using the helperDisplayPathWithScene function.

axisEgo = helperDisplayPathWithScene(laneBoundaries,[xEast yNorth]);

Localize Ego Vehicle

Use the helperLocalizeEgo function to localize the ego trajectory based on lane detections and lane boundaries from HD map. The function enables you to correct the ego vehicle trajectory such that the lane number and offset calculated from the HD map match the lane number and offset calculated from lane detections.

correctedEgoPositions = helperLocalizeEgo(egoLaneData,egoTrajectory,lanes,laneBoundaries);

Write the corrected ego trajectory to a CSV file using the helperWriteRRCSVTrajectory function.

helperWriteRRCSVTrajectory(gpsData.timeStamp,correctedEgoPositions,"localizedTrajectory.csv");Plot the GPS trajectory and corrected ego trajectory data.

plot(axisEgo,correctedEgoPositions(:,1),correctedEgoPositions(:,2),"b-x");

Simulate RoadRunner Scenario

Import the corrected ego trajectory from the corresponding CSV file. Change the color of the vehicle to visually differentiate it from the previously imported vehicle. Select the vehicle in the scenario editing canvas. In the Attributes pane, click the Color box and select the blue color patch.

format = "CSV Trajectory"; filename= fullfile(pwd,"localizedTrajectory.csv"); importScenario(rrApp,filename,format);

Define the simulation parameters of RoadRunner Scenario. Specify the maximum simulation time using the last timestamp of the GPS data. To plot the simulation results, enable data logging.

endTime = gpsData.timeStamp(end);

set(rrSim,MaxSimulationTime=endTime);

set(rrSim,StepSize=0.1);

set(rrSim,Logging="on");Run the simulation. Monitor the status of the simulation and wait for the simulation to complete. Because RoadRunner Scenario cannot remove actors after their exit times, scenario simulation can fail due to collision. To avoid stopping the scenario on collision, remove fail conditions using these steps:

In the Logic editor, click the condition node at the end of the scenario.

In the Attributes pane, click Remove Fail Condition.

set(rrSim,SimulationCommand="Start") while strcmp(get(rrSim,"SimulationStatus"),"Running") simstatus = get(rrSim,"SimulationStatus"); pause(1) end

To view the scenario from the ego vehicle view or chase view, in the Simulation pane, in the Camera section, set Camera View to either Follow or Front. Then, set the Actor attribute to vehicle2, which follows the localized trajectory in the scenario.

This figure shows the recorded camera data and generated RoadRunner scenario. The red car in the RoadRunner scenario follows the raw GPS trajectory, which shows some drift. The blue car corrects the drift by following the trajectory generated using lane detections and HD map data.

References

[1] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.