ultrasonicDetectionGenerator

Generate ultrasonic range detections in driving scenario or RoadRunner Scenario

Since R2022a

Description

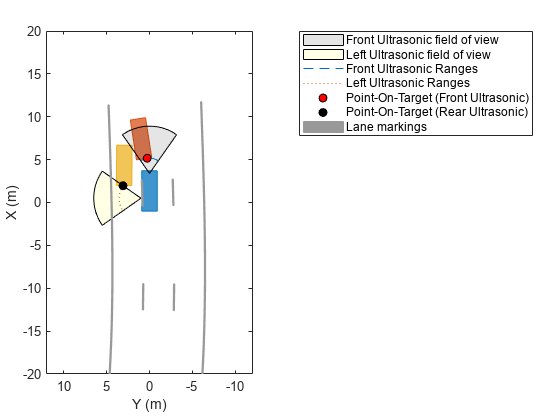

The ultrasonicDetectionGenerator System object™ generates detections from an ultrasonic sensor mounted on an ego vehicle. The detections are range measurements that indicate the distance between the sensor and the closest point of the detected object. You can use an ultrasonicDetectionGenerator object in a scenario containing actors and trajectories, which you can create by using a drivingScenario object.

You can also use the ultrasonicDetectionGenerator object with vehicle actors in RoadRunner Scenario simulation. First you must create a SensorSimulation object to interface sensors with RoadRunner Scenario, and then register the sensor model using the addSensors object function before simulation.

To generate ultrasonic detections:

1. Create the ultrasonicDetectionGenerator object and set its properties.
2. Call the object with arguments, as if it were a function.
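The two steps above can be sketched as follows. This is a minimal illustration, assuming Automated Driving Toolbox is available. The actor positions, the sensor index, and the use of targetPoses to obtain target poses in ego vehicle coordinates are choices made for this sketch, not values taken from this page.

```matlab
% Build a small scenario: an ego vehicle and one target ahead of it.
% (Positions are illustrative assumptions.)
scenario = drivingScenario;
egoVehicle = vehicle(scenario,ClassID=1,Position=[0 0 0]);
targetVehicle = vehicle(scenario,ClassID=1,Position=[6 0 0]);

% Step 1: create the System object and set its properties.
ultrasonic = ultrasonicDetectionGenerator(1);

% Step 2: call the object with arguments, as if it were a function.
targets = targetPoses(egoVehicle);   % target poses in ego vehicle coordinates
simTime = scenario.SimulationTime;   % current scenario time, in seconds
[dets,isValidTime] = ultrasonic(targets,simTime);
```

Whether dets contains a detection depends on the sensor properties (mounting, field of view, detection range) relative to the target, so check isValidTime and the size of dets before using the result.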

To learn more about how System objects work, see What Are System Objects?

Creation

Syntax

Description

ultrasonic = ultrasonicDetectionGenerator creates an ultrasonicDetectionGenerator object with default property values to generate range detections for a simulated ultrasonic sensor.

ultrasonic = ultrasonicDetectionGenerator(id) sets the SensorIndex property to id.

ultrasonic = ultrasonicDetectionGenerator(___,Name=Value) sets properties using one or more name-value arguments. For example, ultrasonicDetectionGenerator(MountingLocation=[1 0 0.5],MountingAngles=[0 0 pi/20]) specifies the mounting location and angle of the ultrasonic sensor on the ego vehicle.
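As a short sketch of the three creation syntaxes, assuming Automated Driving Toolbox is available: the variable names and sensor index values below are illustrative, and the mounting values are the ones quoted in the name-value example above.

```matlab
% Default property values.
ultrasonic1 = ultrasonicDetectionGenerator;

% Set the SensorIndex property to 2 (illustrative index).
ultrasonic2 = ultrasonicDetectionGenerator(2);

% Sensor index plus name-value arguments.
ultrasonic3 = ultrasonicDetectionGenerator(3, ...
    MountingLocation=[1 0 0.5],MountingAngles=[0 0 pi/20]);

% Confirm that the positional argument set SensorIndex.
disp(ultrasonic2.SensorIndex)
```

Giving each sensor a distinct SensorIndex is useful when several sensors are mounted on the same ego vehicle, because it lets you tell their detections apart.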

Properties

Usage

Description

[dets,isValidTime] = ultrasonic(targets,simTime) also returns a logical value, isValidTime, indicating whether simTime is a valid time for generating detections. If simTime is an integer multiple of the reciprocal of the UpdateRate property value, then isValidTime is 1 (true).
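The "integer multiple of the reciprocal of UpdateRate" condition can be illustrated with plain arithmetic. The sketch below is not the object's internal implementation. The update rate of 20 Hz, the sample times, and the tolerance are all assumptions chosen for the example.

```matlab
updateRate = 20;                 % assumed update rate, in Hz (hypothetical value)
updateInterval = 1/updateRate;   % reciprocal of the update rate: 0.05 s

simTimes = [0 0.05 0.07 0.10];   % candidate simulation times, in seconds

% A time is a multiple of the update interval when the ratio is
% (numerically) an integer. The tolerance guards against floating-point error.
ratio = simTimes/updateInterval;
isMultiple = abs(ratio - round(ratio)) < 1e-9;

disp(isMultiple)
```

With these values, the times 0, 0.05, and 0.10 are multiples of the 0.05 s interval, and 0.07 is not.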

Input Arguments

Output Arguments

Object Functions

To use an object function, specify the System object as the first input argument. For example, to release system resources of a System object named obj, use this syntax:

release(obj)

Examples

Version History

Introduced in R2022a

See Also

Objects

objectDetection | drivingScenario | drivingRadarDataGenerator | visionDetectionGenerator | lidarPointCloudGenerator | insSensor