squeezenet

(Not recommended) SqueezeNet convolutional neural network

squeezenet is not recommended. Use the imagePretrainedNetwork function instead. For more information, see Version History.
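As a rough sketch of the recommended replacement, the snippet below loads SqueezeNet through imagePretrainedNetwork. It assumes a release that ships imagePretrainedNetwork and scores2label (R2024a or later) with Deep Learning Toolbox installed; the test image peppers.png is only an illustrative choice, not something this page prescribes.

```matlab
% Sketch, assuming R2024a or later with Deep Learning Toolbox.
% imagePretrainedNetwork returns a dlnetwork object plus the class names.
[net, classNames] = imagePretrainedNetwork("squeezenet");

% peppers.png ships with MATLAB; resize it to the 227-by-227 input size.
I = imread("peppers.png");
I = imresize(I, [227 227]);

% A dlnetwork expects single-precision input; predict returns class scores.
scores = predict(net, single(I));

% Convert the score vector to the most likely class label.
label = scores2label(scores, classNames);
disp(label)
```

Unlike squeezenet, which returns a DAGNetwork object, this route returns a dlnetwork object, so prediction goes through predict and scores2label rather than classify.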

Description

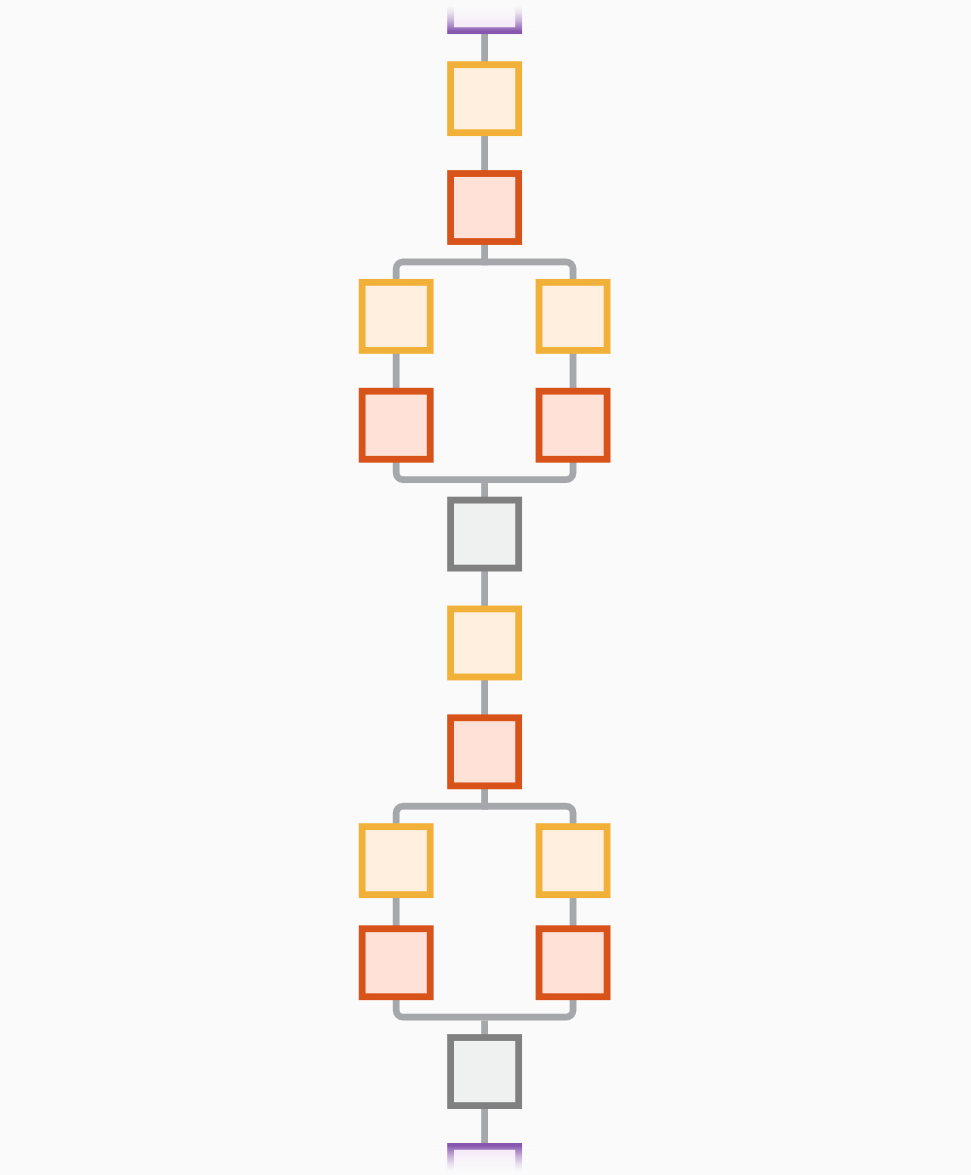

SqueezeNet is a convolutional neural network that is 18 layers deep. You can load a pretrained version of the network trained on more than a million images from the ImageNet database [1]. The pretrained network can classify images into 1000 object categories, such as keyboard, mouse, pencil, and many animals. As a result, the network has learned rich feature representations for a wide range of images. This function returns a SqueezeNet v1.1 network, which has similar accuracy to SqueezeNet v1.0 but requires fewer floating-point operations per prediction [3]. The network has an image input size of 227-by-227. For more pretrained networks in MATLAB®, see Pretrained Deep Neural Networks.
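The description above can be illustrated with a short, hedged sketch: load the pretrained network, read the input size from its first layer, and classify one image. The image peppers.png ships with MATLAB and is used here only as a convenient test input; Deep Learning Toolbox is assumed to be installed.

```matlab
% Load the pretrained SqueezeNet v1.1 network (ImageNet weights).
net = squeezenet;

% The first layer is the image input layer; its InputSize is [227 227 3].
inputSize = net.Layers(1).InputSize;

% Read a sample image and resize it to the network input size.
I = imread('peppers.png');
I = imresize(I, inputSize(1:2));

% Classify the image; scores holds one value per ImageNet category.
[label, scores] = classify(net, I);
disp(label)
```

Reading the size from the network rather than hard-coding 227 keeps the same snippet usable if you swap in a different pretrained network.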

net = squeezenet('Weights','imagenet') returns a SqueezeNet network trained on the ImageNet data set. This syntax is equivalent to net = squeezenet.

lgraph = squeezenet('Weights','none')
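The following minimal sketch shows what the 'Weights','none' syntax gives you: an untrained architecture returned as a layer graph rather than a trained network. It assumes Deep Learning Toolbox is installed; the variable names are illustrative.

```matlab
% Request the untrained SqueezeNet architecture.
lgraph = squeezenet('Weights','none');

% The result is a LayerGraph object, not a trained DAGNetwork.
disp(class(lgraph))

% Inspect the input layer to confirm the expected image size.
inputLayer = lgraph.Layers(1);
disp(inputLayer.InputSize)
```

A layer graph obtained this way can be passed to trainNetwork together with training data and options to train the architecture from scratch.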

Examples

Output Arguments

References

[1] ImageNet. http://www.image-net.org.

[2] Iandola, Forrest N., Song Han, Matthew W. Moskewicz, Khalid Ashraf, William J. Dally, and Kurt Keutzer. “SqueezeNet: AlexNet-Level Accuracy with 50x Fewer Parameters and <0.5MB Model Size.” Preprint, submitted November 4, 2016. https://arxiv.org/abs/1602.07360.

[3] Iandola, Forrest N. “SqueezeNet.” https://github.com/forresti/SqueezeNet.