googlenet

(Not recommended) GoogLeNet convolutional neural network

googlenet is not recommended. Use the imagePretrainedNetwork function instead and specify the "googlenet" model. For more information, see Version History.
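As a minimal sketch of the recommended replacement, the following loads the model with imagePretrainedNetwork and classifies one image. It assumes R2024a or later (for imagePretrainedNetwork, minibatchpredict, and scores2label) and that the required support package is installed. The file peppers.png is only a convenient sample image, and the variable names are illustrative.

```matlab
% Load ImageNet-trained GoogLeNet as a dlnetwork, together with its class names.
[net,classNames] = imagePretrainedNetwork("googlenet");

% Read a sample image and resize it to the network input size.
I = imread("peppers.png");
inputSize = net.Layers(1).InputSize;
X = single(imresize(I,inputSize(1:2)));

% Predict class scores and convert the highest score to a label.
scores = minibatchpredict(net,X);
label = scores2label(scores,classNames);
disp(label)
```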

Description

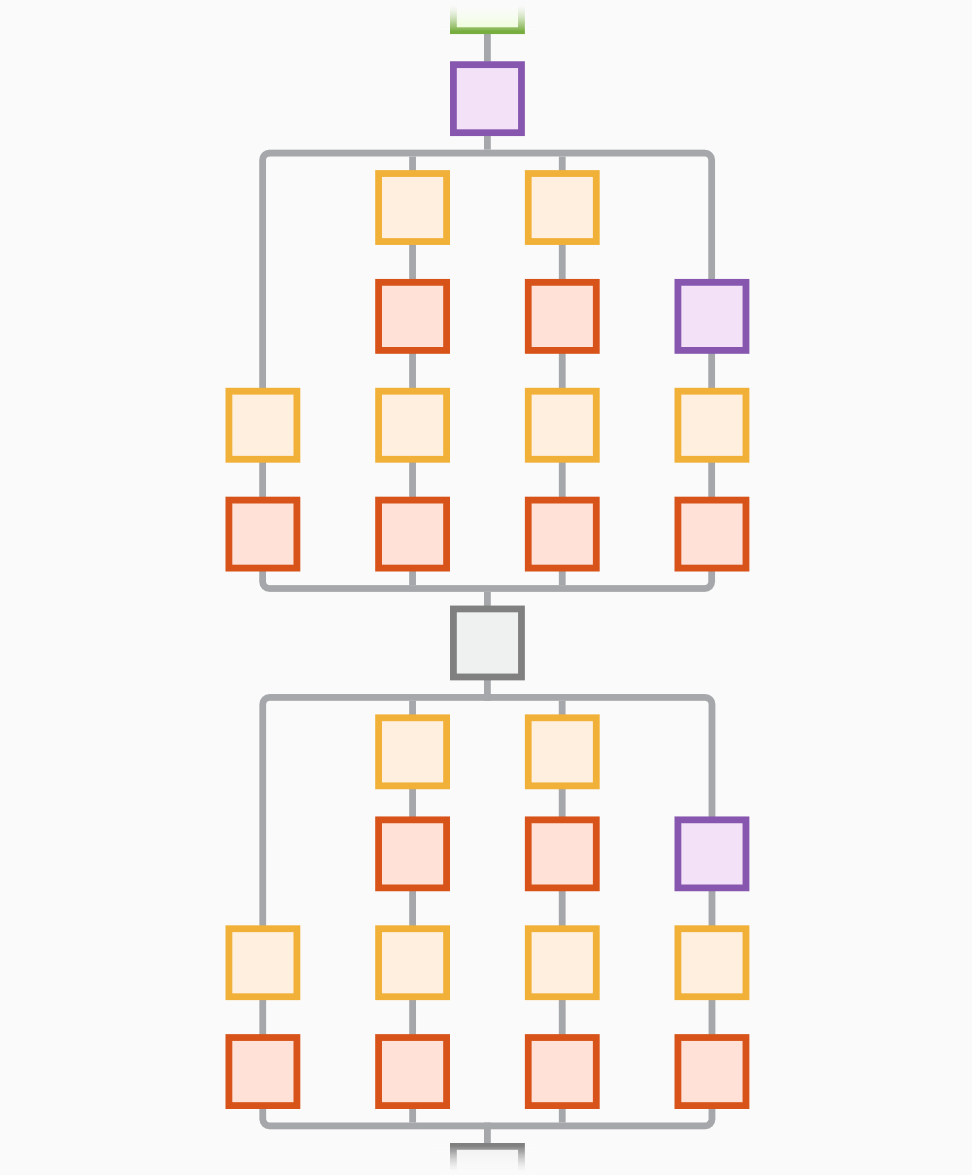

GoogLeNet is a convolutional neural network that is 22 layers deep. You can load a pretrained version of the network trained on either the ImageNet [1] or Places365 [2] [3] data sets. The network trained on ImageNet classifies images into 1000 object categories, such as keyboard, mouse, pencil, and many animals. The network trained on Places365 is similar to the network trained on ImageNet, but classifies images into 365 different place categories, such as field, park, runway, and lobby. These networks have learned different feature representations for a wide range of images. The pretrained networks both have an image input size of 224-by-224. For more pretrained networks in MATLAB®, see Pretrained Deep Neural Networks.
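For existing code that still calls googlenet, a minimal classification sketch might look like the following. It assumes the GoogLeNet support package is installed and uses peppers.png only as a sample image. The resize step is needed because the network expects 224-by-224 inputs.

```matlab
% Load the ImageNet-trained network (returned as a DAGNetwork).
net = googlenet;

% The first layer is the image input layer; its size is [224 224 3].
inputSize = net.Layers(1).InputSize;

% Resize a sample image to the input size and classify it.
I = imread("peppers.png");
I = imresize(I,inputSize(1:2));
label = classify(net,I);
disp(label)
```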

net = googlenet returns a GoogLeNet network trained on the ImageNet data set.

This function requires the Deep Learning Toolbox™ Model for GoogLeNet Network support package. If this support package is not installed, then the function provides a download link.

net = googlenet('Weights',weights) returns a GoogLeNet network trained on either the ImageNet or Places365 data set. The syntax googlenet('Weights','imagenet') (default) is equivalent to googlenet.

The network trained on ImageNet requires the Deep Learning Toolbox Model for GoogLeNet Network support package. The network trained on Places365 requires the Deep Learning Toolbox Model for Places365-GoogLeNet Network support package. If the required support package is not installed, then the function provides a download link.
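To illustrate the Places365 option, this sketch loads those weights and inspects the class list. It assumes the Places365-GoogLeNet support package is installed. The variable names netPlaces and placeClasses are illustrative.

```matlab
% Load GoogLeNet trained on the Places365 data set.
netPlaces = googlenet('Weights','places365');

% The final layer is the classification output layer, which stores the class names.
placeClasses = netPlaces.Layers(end).Classes;
disp(numel(placeClasses))

% The input size matches that of the ImageNet-trained network.
disp(netPlaces.Layers(1).InputSize)
```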

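lgraph = googlenet('Weights','none') returns the untrained GoogLeNet network architecture. The untrained model does not require the support package.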
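A short sketch of working with the untrained architecture follows. It assumes only Deep Learning Toolbox. The analyzeNetwork call opens an interactive report, so it is left commented out here.

```matlab
% Get the untrained architecture as a layer graph.
lgraph = googlenet('Weights','none');

% Inspect the first and last layers of the graph.
disp(lgraph.Layers(1))
disp(lgraph.Layers(end))

% Optionally open an interactive summary of the architecture.
% analyzeNetwork(lgraph)
```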

Examples

Input Arguments

Output Arguments

References

[1] ImageNet. http://www.image-net.org.

[2] Zhou, Bolei, Aditya Khosla, Agata Lapedriza, Antonio Torralba, and Aude Oliva. "Places: An image database for deep scene understanding." arXiv preprint arXiv:1610.02055 (2016).

[3] Places. http://places2.csail.mit.edu/

[4] Szegedy, Christian, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. “Going Deeper with Convolutions.” In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1–9. Boston, MA, USA: IEEE, 2015. https://doi.org/10.1109/CVPR.2015.7298594.

[5] BVLC GoogLeNet Model. https://github.com/BVLC/caffe/tree/master/models/bvlc_googlenet

Extended Capabilities

Version History

Introduced in R2017b

See Also

imagePretrainedNetwork | dlnetwork | trainingOptions | trainnet | Deep Network Designer