bisenetv2

Create BiSeNet v2 convolutional neural network for semantic segmentation

Since R2025a

Syntax

Description

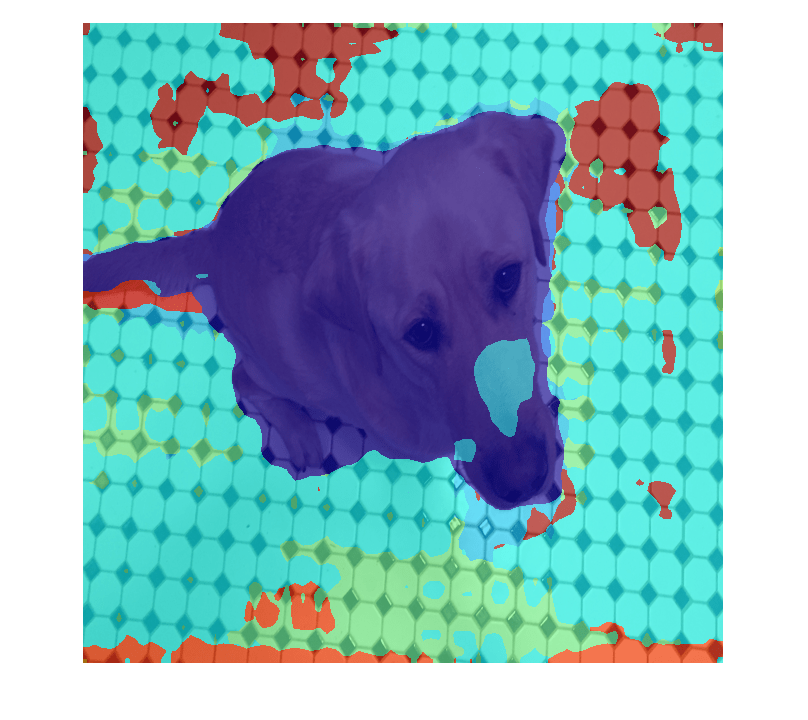

Use the bisenetv2 function to semantically segment images

using the BiSeNet v2 convolutional neural network. Using the pretrained network, trained on

171 image classes of the COCO-Stuff data set [2], you can perform inference

on test images which contain these classes.

To perform semantic segmentation on a custom data set,

you must train the network on your data set using the trainnet (Deep Learning Toolbox) function.

Note

This functionality requires Deep Learning Toolbox™ and the Computer Vision Toolbox™ Model for BiSeNet v2 Semantic Segmentation Network. You can install the Computer Vision Toolbox Model for BiSeNet v2 Semantic Segmentation Network from Add-On Explorer. For more information about installing add-ons, see Get and Manage Add-Ons.

net = bisenetv2(imageSize,numClasses)imageSize and the specified number of classes

numClasses. Train this network on a custom data set using the using

the trainnet (Deep Learning Toolbox)

function.

[

specifies options using one or more name-value arguments in addition to the input arguments

from the previous syntax. When you specify one or more network options using a name-value

argument, you create a custom BiSeNet v2 network with uninitialized weights.net] = bisenetv2(imageSize,numClasses,Name=Value)

For example, bisenetv2(ChannelRatio=16) specifies the channel ratio

between the semantic branch and detail branch as 16.

Examples

Input Arguments

Name-Value Arguments

Output Arguments

More About

References

[1] Yu, Changqian, Changxin Gao, Jingbo Wang, Gang Yu, Chunhua Shen, and Nong Sang. “BiSeNet V2: Bilateral Network with Guided Aggregation for Real-Time Semantic Segmentation.” International Journal of Computer Vision 129, no. 11 (November 2021): 3051–68. https://doi.org/10.1007/s11263-021-01515-2.

[2] Caesar, Holger, Jasper Uijlings, and Vittorio Ferrari. “COCO-Stuff: Thing and Stuff Classes in Context.” In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1209–18. Salt Lake City, UT, USA: IEEE, 2018. https://doi.org/10.1109/CVPR.2018.00132.

Version History

Introduced in R2025a

See Also

Objects

dlnetwork(Deep Learning Toolbox)

Functions

trainnet(Deep Learning Toolbox) |deeplabv3plus|unet|unet3d|pretrainedEncoderNetwork|semanticseg|evaluateSemanticSegmentation