lsqminnorm

Minimum norm least-squares solution to linear equation

Syntax

Description

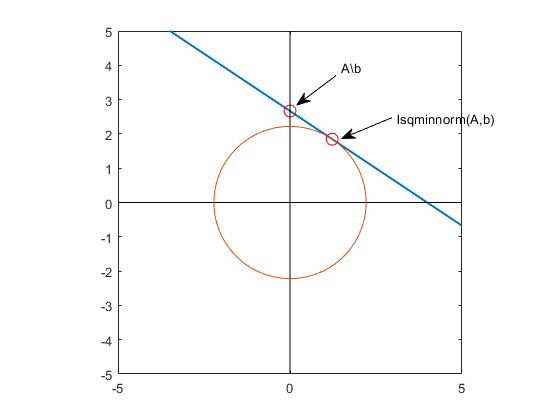

X = lsqminnorm(A,B) returns an array X that solves the linear equation AX = B and minimizes the value of norm(A*X-B). If several solutions exist, then lsqminnorm returns the solution that minimizes norm(X). If B has multiple columns, then the previous statements are true for each column of X and B, respectively.
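The sketch below shows the basic call on a small underdetermined system. The matrix and right-hand side are illustrative values, chosen so the answer can be checked by hand. The vector [1; 1; 1] satisfies both equations, and it lies in the row space of A (it is orthogonal to the null vector [1; -2; 1]), so it is the minimum-norm solution. The comparison with backslash assumes the documented behavior of A\b for underdetermined systems, which returns a basic solution with at most rank(A) nonzero entries rather than the minimum-norm one.

```matlab
% Underdetermined system: 2 equations, 3 unknowns, so infinitely many
% exact solutions exist (A has the null vector [1; -2; 1])
A = [1 2 3; 4 5 6];
b = [6; 15];

% Minimum-norm solution; here x_min equals [1; 1; 1] up to roundoff
x_min = lsqminnorm(A, b)

% Backslash also solves A*x = b, but returns a basic solution with at most
% rank(A) nonzero entries, which is generally not the minimum-norm one
x_basic = A \ b

% Both residuals are at roundoff level ...
res = [norm(A*x_min - b), norm(A*x_basic - b)]

% ... but x_min has the smaller Euclidean norm
nrm = [norm(x_min), norm(x_basic)]
```

Every exact solution has the form [1; 1; 1] + t*[1; -2; 1]; lsqminnorm selects t = 0, which gives the smallest norm(X).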

X = lsqminnorm(___,rankWarn) specifies whether to display a warning if A has low rank. You can specify this option in addition to any of the input argument combinations in previous syntaxes. rankWarn can be "nowarn" (default) or "warn".
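As an illustration of rankWarn, the following sketch uses magic(4), a 4-by-4 matrix of rank 3 whose null space is spanned by [1; 3; -3; -1]. The right-hand side is built to be consistent, and ones(4,1) is orthogonal to that null vector, so the minimum-norm solution is ones(4,1). The choice of matrix is only for demonstration, and the exact wording of the warning is not reproduced here.

```matlab
% magic(4) is 4-by-4 but has rank 3, so A has low rank
A = magic(4);
b = A * ones(4, 1);   % consistent right-hand side (every row sums to 34)

% Default behavior ("nowarn"): no warning is displayed
x_quiet = lsqminnorm(A, b);

% "warn": same computation, but a warning about the low rank of A is displayed
x_warn = lsqminnorm(A, b, "warn");

% The option controls only the warning, not the computed solution
disp(max(abs(x_quiet - x_warn)))
```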

Examples

Input Arguments

Tips

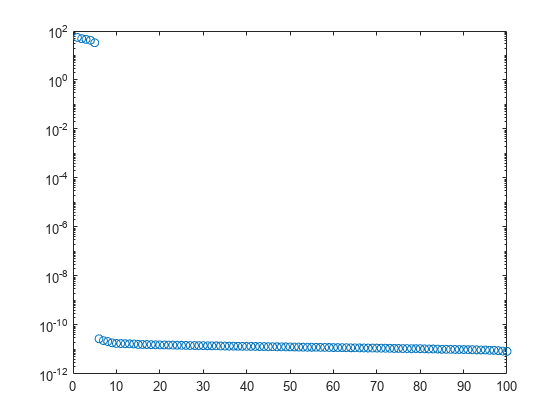

The minimum-norm solution computed by lsqminnorm is of particular interest when several solutions exist. The equation Ax = b has infinitely many least-squares solutions whenever A is underdetermined (fewer rows than columns) or of low rank.

lsqminnorm(A,B,tol) is typically more efficient than pinv(A,tol)*B for computing minimum-norm least-squares solutions to linear systems. lsqminnorm uses the complete orthogonal decomposition (COD) to find a low-rank approximation of A, while pinv uses the singular value decomposition (SVD). Therefore, the results of pinv and lsqminnorm do not match exactly.

For sparse matrices, lsqminnorm uses a different algorithm than for dense matrices, and therefore can produce different results.
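To compare lsqminnorm with pinv, the sketch below builds a random 6-by-4 matrix of rank 2 and solves for three right-hand sides at once, so each column of X corresponds to a column of B. The sizes, seed, and tolerance are arbitrary illustrative choices; it assumes tol = 1e-10 sits well between the roundoff-level and the nonzero singular values, so both functions treat A as rank 2. Under that assumption the two answers are expected to agree to roundoff, but not bit for bit.

```matlab
% Random 6-by-4 matrix of rank 2 (product of 6-by-2 and 2-by-4 factors)
rng(0)                      % fix the seed for reproducibility
A = randn(6, 2) * randn(2, 4);
B = randn(6, 3);            % three right-hand sides, one per column
tol = 1e-10;                % rank tolerance, well above roundoff level

X_cod = lsqminnorm(A, B, tol);   % based on complete orthogonal decomposition
X_svd = pinv(A, tol) * B;        % based on singular value decomposition

% Largest entrywise difference: roundoff level, but typically nonzero
d = max(abs(X_cod(:) - X_svd(:)))
```

If tol falls close to one of the singular values of A, the two functions can determine different ranks, and the results can then differ by much more than roundoff.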