Train Network on Amazon Web Services Using MATLAB Deep Learning Container

This example shows how to train a deep learning network in the cloud using MATLAB® on an Amazon EC2® instance.

This workflow helps you speed up your deep learning applications by training neural networks in the MATLAB Deep Learning Container on the cloud. Using MATLAB in the cloud allows you to choose machines where you can take full advantage of high-performance NVIDIA® GPUs. You can access the MATLAB Deep Learning Container remotely using a web browser or a VNC connection. Then you can run MATLAB desktop in the cloud on an Amazon EC2 GPU-enabled instance to benefit from the computing resources available.

To start training a deep learning model on AWS® using the MATLAB Deep Learning Container, you must:

Check the requirements to use the MATLAB Deep Learning Container on Docker Hub.

Ensure that you have an AWS account.

Launch the Docker host instance.

Pull and run the container.

Run MATLAB in the container.

You can test the container using the deep learning example, Create Simple Deep Learning Neural Network for Classification, included in the default folder of the container.

Semantic Segmentation in the Cloud

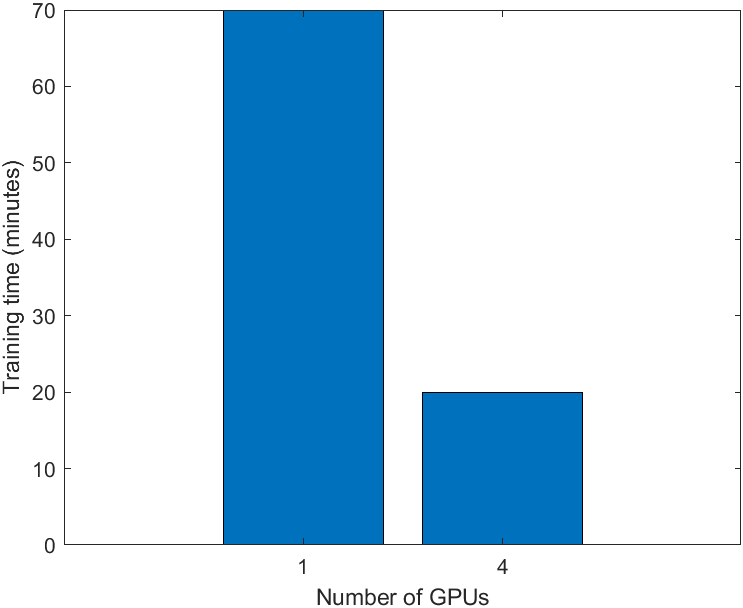

This example shows how to train a semantic segmentation network using a single GPU and then using 4 GPUs on a p3.8xlarge Amazon EC2 instance using the MATLAB Deep Learning Container cloud workflow. The p3.8xlarge instance has 4 NVIDIA Tesla® V100 SMX2 GPUs with a total of 64 GB of GPU memory. Using 4 GPUs speeds up the training by about a factor of 3 compared to a single GPU.

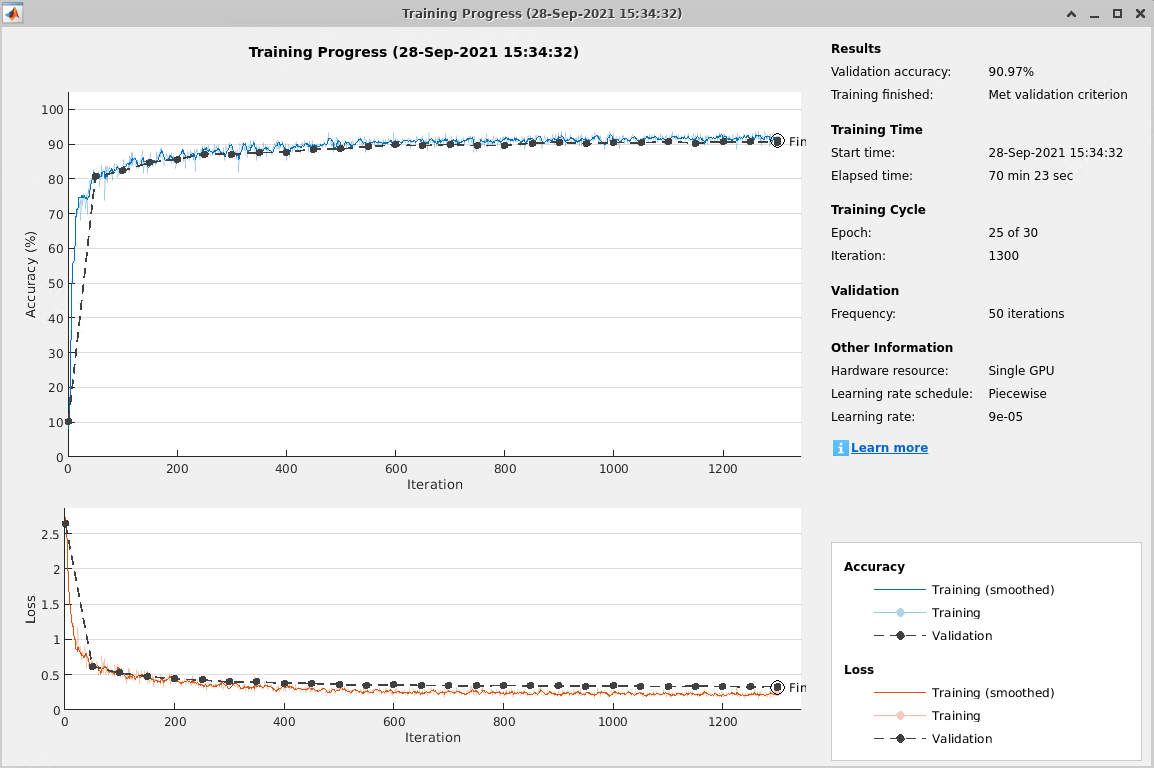

Open the live script from the Semantic Segmentation Using Deep Learning example and run through the sections. This example in the live script shows training if you choose a single NVIDIA Tesla V100 SMX2 GPU on a p3.8xlarge EC2 instance. If you do not have this instance in your region, pick another multi-GPU enabled instance. Note that to train the semantic segmentation network using the Live Script example, change doTraining to true. To visualize the loss over different epochs during training, set Plots to "training-progress" in the training options. Training took around 45 minutes to meet the validation criterion, as shown in the training progress plot.

Semantic Segmentation in the Cloud with Multiple GPUs

Train the network on a machine with multiple GPUs to improve performance.

When you train with multiple GPUs, each image batch is distributed between the GPUs. Distribution between GPUs effectively increases the total GPU memory available, allowing larger batch sizes. A recommended practice is to scale up the mini-batch size linearly with the number of GPUs to keep the workload on each GPU constant. Because increasing the mini-batch size improves the significance of each iteration, also increase the initial learning rate by an equivalent factor.

For example, to run this training on a machine with 4 GPUs:

In the semantic segmentation example, set

ExecutionEnvironmentto"multi-gpu"in thetrainingOptionsfunction.Multiply the mini-batch size by 4 to match the number of GPUs.

Multiply the initial learning rate by 4 to match the number of GPUs.

The following training progress plot shows the improvement in performance when you use multiple GPUs. The results show the semantic segmentation network trained on 4 NVIDIA Tesla V100 SMX2 GPUs with a total of 64 GB of GPU memory. The example used the multi-gpu training option with the mini-batch size and initial learning rate scaled by a factor of 4. This network trained for 18 epochs in around 20 minutes.

As shown in the following plot, using 4 GPUs and adjusting the training options as described above results in a network that has the same validation accuracy but trains 2.7x faster.