Code Generation for Lidar Object Detection Using SqueezeSegV2 Network

This example shows how to generate CUDA® MEX code for a lidar object detection network. In the example, you first segment the point cloud with a pretrained network, then cluster the points and fit 3-D bounding boxes to each cluster. Finally, you generate MEX code for the network.

The lidar object detection network in this example is a SqueezeSegV2 network pretrained on the PandaSet data set, which contains 8240 unorganized lidar point cloud scans of various city scenes captured using a Pandar64 sensor. The network can segment 12 different classes and fit bounding boxes to objects in the car class.

Third-Party Prerequisites

Required

CUDA-enabled NVIDIA® GPU and compatible driver

Optional

For non-MEX builds such as static libraries, dynamic libraries, or executables, this example has the following additional requirements.

NVIDIA CUDA toolkit

NVIDIA cuDNN library

Environment variables for the compilers and libraries. For more information, see Third-Party Hardware (GPU Coder) and Setting Up the Prerequisite Products (GPU Coder).

Verify GPU Environment

To verify that the compilers and libraries for running this example are set up correctly, use the coder.checkGpuInstall (GPU Coder) function.

envCfg = coder.gpuEnvConfig("host");
envCfg.DeepLibTarget = "cudnn";
envCfg.DeepCodegen = 1;
envCfg.Quiet = 1;
coder.checkGpuInstall(envCfg);
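Because Quiet is set to 1, the check prints nothing on its own. As a minimal sketch, assuming envCfg is the configuration object created above, you can capture the structure that coder.checkGpuInstall returns and display it to see which checks passed. The variable name results is chosen here for illustration.

```matlab
% Assumes envCfg is the coder.gpuEnvConfig object configured above.
% Capture the results structure instead of discarding it.
results = coder.checkGpuInstall(envCfg);

% Display every field of the structure to see which checks passed.
disp(results)
```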

Load SqueezeSegV2 Network and Entry-Point Function

Use the getSqueezeSegV2PandasetNet function, attached to this example as a supporting file, to load the pretrained SqueezeSegV2 network. For more information on how to train this network, see Lidar Point Cloud Semantic Segmentation Using SqueezeSegV2 Deep Learning Network (Lidar Toolbox).

net = getSqueezeSegV2PandasetNet;

The pretrained network is a dlnetwork. To display an interactive visualization of the network architecture, use the analyzeNetwork function.
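A minimal sketch of that call, assuming net is the dlnetwork loaded above with getSqueezeSegV2PandasetNet:

```matlab
% Assumes net is the pretrained dlnetwork loaded above.
% Opens the interactive network analyzer for the SqueezeSegV2 architecture.
analyzeNetwork(net)
```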

The segmentClusterDetect entry-point function takes the organized point cloud matrix as input and passes it to the trained network for segmentation. It then clusters the points in the car class and fits a 3-D bounding box to each cluster. The function loads the network object into a persistent variable and reuses that object for subsequent prediction calls.

type('segmentClusterDetect.m');

function [op,bboxes] = segmentClusterDetect(I)
% Entry point function to segment, cluster, and fit 3-D boxes.
%#codegen

persistent net;

if isempty(net)
    net = coder.loadDeepLearningNetwork('trainedSqueezesegV2NetPandaset.mat');
end

% Pass input.
predictedResult = predict(net,dlarray(I,'SSCB'));
[~,op] = max(extractdata(predictedResult),[],3);

% Get the indices of points for the required class.
carIdx = (op == 7);

% Select the points of the required class and cluster them based on distance.
ptCldMod = select(pointCloud(I(:,:,1:3)),carIdx);
[labels,numClusters] = pcsegdist(ptCldMod,0.5);

% Select each cluster and fit a cuboid to each cluster.
bboxes = zeros(0,9);
for num = 1:numClusters
    labelIdx = (labels == num);

    % Ignore any cluster with less than 150 points.
    if sum(labelIdx,'all') < 150
        continue;
    end

    pcSeg = select(ptCldMod,labelIdx);
    mdl = pcfitcuboid(pcSeg);
    bboxes = [bboxes;mdl.Parameters];
end

end

Execute Entry-Point Function

Read the point cloud and convert it to organized format using the pcorganize (Lidar Toolbox) function. For more details on converting unorganized point clouds to organized format, see the Unorganized to Organized Conversion of Point Clouds Using Spherical Projection (Lidar Toolbox) example.

ptCloudIn = pcread("pandasetDrivingData.pcd");
vbeamAngles = [15.0000 11.0000 8.0000 5.0000 3.0000 2.0000 1.8333 1.6667 1.5000 1.3333 1.1667 1.0000 0.8333 0.6667 ...
    0.5000 0.3333 0.1667 0 -0.1667 -0.3333 -0.5000 -0.6667 -0.8333 -1.0000 -1.1667 -1.3333 -1.5000 -1.6667 ...
    -1.8333 -2.0000 -2.1667 -2.3333 -2.5000 -2.6667 -2.8333 -3.0000 -3.1667 -3.3333 -3.5000 -3.6667 -3.8333 -4.0000 ...
    -4.1667 -4.3333 -4.5000 -4.6667 -4.8333 -5.0000 -5.1667 -5.3333 -5.5000 -5.6667 -5.8333 -6.0000 -7.0000 -8.0000 ...
    -9.0000 -10.0000 -11.0000 -12.0000 -13.0000 -14.0000 -19.0000 -25.0000];
hResolution = 1856;
params = lidarParameters(vbeamAngles,hResolution);
ptCloudOrg = pcorganize(ptCloudIn,params);

Convert the organized point cloud to a 5-channel input image using the helperPointCloudToImage function, attached to the example as a supporting file.

I = helperPointCloudToImage(ptCloudOrg);
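The code generation step later in this example specifies a 64-by-1856-by-5 single-precision input, which matches the 64 beam angles and the horizontal resolution of 1856 used above. As a hedged sanity check, assuming I is the output of helperPointCloudToImage, you can confirm the size and inspect the data type before calling the entry-point function. The variable name expectedSize is illustrative.

```matlab
% Assumes I is the output of helperPointCloudToImage(ptCloudOrg).
expectedSize = [64 1856 5];   % 64 beams, 1856 columns, 5 channels

% Stop early if the image does not match the size used for code generation.
assert(isequal(size(I),expectedSize), ...
    "Unexpected input size: %s",mat2str(size(I)));

% Report the data type; the codegen call in this example specifies single.
fprintf("Input class: %s\n",class(I));
```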

Use the segmentClusterDetect entry-point function to get the predicted bounding boxes for cars and the segmentation labels.

[op,bboxes] = segmentClusterDetect(I);
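Each row of bboxes holds the Parameters vector of a fitted cuboid model, which has the form [xctr yctr zctr xlen ylen zlen xrot yrot zrot]. The following sketch, which assumes bboxes is the output of the call above, splits the matrix into its parts. The variable names centers, dims, and rotations are chosen for illustration.

```matlab
% Assumes bboxes is the M-by-9 matrix returned by segmentClusterDetect.
centers   = bboxes(:,1:3);   % cuboid centers: x, y, z
dims      = bboxes(:,4:6);   % cuboid lengths along x, y, z
rotations = bboxes(:,7:9);   % rotation angles about x, y, z, in degrees

fprintf("Detected %d car cuboids.\n",size(bboxes,1));
```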

Get the location and color map of the output.

cmap = helperLidarColorMap;
colormap = cmap(op,:);
loc = reshape(I(:,:,1:3),[],3);

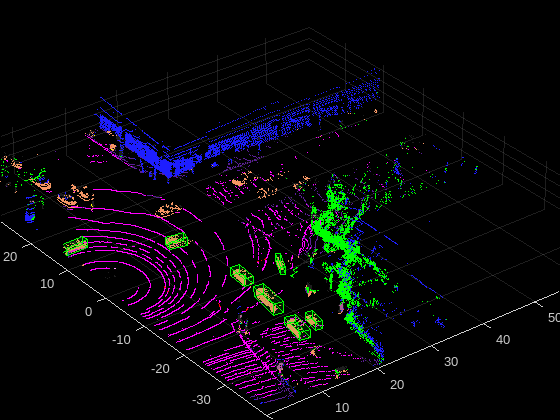

Display the point cloud with segmentation output and bounding boxes.

figure
ax = pcshow(loc,colormap);
showShape("cuboid",bboxes,Parent=ax,Opacity=0.1,...
    Color="green",LineWidth=0.5);
zoom(ax,2);

Generate CUDA MEX Code

To generate CUDA code for the segmentClusterDetect entry-point function, create a GPU code configuration object for a MEX target and set the target language to C++. Use the coder.DeepLearningConfig (GPU Coder) function to create a cuDNN deep learning configuration object and assign it to the DeepLearningConfig property of the GPU code configuration object.

cfg = coder.gpuConfig('mex');
cfg.TargetLang = 'C++';
cfg.DeepLearningConfig = coder.DeepLearningConfig(TargetLibrary='cudnn');
args = {randn(64,1856,5,'single')};
codegen -config cfg segmentClusterDetect -args args -report

Code generation successful: View report

To generate CUDA code for the TensorRT target, create and use a TensorRT deep learning configuration object instead of the cuDNN configuration object.
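A minimal sketch of the TensorRT variant follows. It assumes that the NVIDIA TensorRT library is installed and that the corresponding environment variables are set. The variable name cfgTrt is chosen here for illustration. Note that this command writes to the same default MEX file name as the cuDNN build.

```matlab
% Assumes TensorRT is installed and configured for GPU Coder.
cfgTrt = coder.gpuConfig('mex');
cfgTrt.TargetLang = 'C++';

% Use a TensorRT deep learning configuration instead of cuDNN.
cfgTrt.DeepLearningConfig = coder.DeepLearningConfig(TargetLibrary='tensorrt');

% Same input specification as the cuDNN build: 64-by-1856-by-5 single.
args = {randn(64,1856,5,'single')};
codegen -config cfgTrt segmentClusterDetect -args args -report
```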

Run Generated MEX Code

Call the generated CUDA MEX code on the 5-channel image I, created from ptCloudIn.

[op,bboxes] = segmentClusterDetect_mex(I);

Get the color map of the output.

colormap = cmap(op,:);

Display the output.

figure
ax1 = pcshow(loc,colormap);
showShape("cuboid",bboxes,Parent=ax1,Opacity=0.1,...
    Color="green",LineWidth=0.5);
zoom(ax1,2);
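Beyond comparing the two figures visually, you can check numerically how closely the generated MEX function matches the MATLAB entry-point function. The following sketch assumes I is the 5-channel image created earlier and that segmentClusterDetect_mex has been generated. The variable names are illustrative, and small differences between the two outputs are possible because the computations run through different code paths.

```matlab
% Assumes I is the 5-channel image and the MEX file has been generated.
[opMatlab,bboxesMatlab] = segmentClusterDetect(I);
[opMex,bboxesMex] = segmentClusterDetect_mex(I);

% Fraction of points that receive the same class label from both versions.
labelAgreement = mean(opMatlab(:) == opMex(:));
fprintf("Label agreement: %.2f%%\n",100*labelAgreement);

% Compare the number of fitted cuboids.
fprintf("Cuboids: MATLAB %d, MEX %d\n", ...
    size(bboxesMatlab,1),size(bboxesMex,1));
```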

Supporting Functions

Define Lidar Color Map

The helperLidarColorMap function defines the colormap used by the lidar dataset.

function cmap = helperLidarColorMap
% Lidar color map for the pandaset classes
cmap = [[30 30 30];      % UnClassified
        [0 255 0];       % Vegetation
        [255 150 255];   % Ground
        [255 0 255];     % Road
        [255 0 0];       % Road Markings
        [90 30 150];     % Side Walk
        [245 150 100];   % Car
        [250 80 100];    % Truck
        [150 60 30];     % Other Vehicle
        [255 255 0];     % Pedestrian
        [0 200 255];     % Road Barriers
        [170 100 150];   % Signs
        [30 30 255]];    % Building
cmap = cmap./255;
end

References

[1] Wu, Bichen, Xuanyu Zhou, Sicheng Zhao, Xiangyu Yue, and Kurt Keutzer. “SqueezeSegV2: Improved Model Structure and Unsupervised Domain Adaptation for Road-Object Segmentation from a LiDAR Point Cloud.” In 2019 International Conference on Robotics and Automation (ICRA), 4376–82. Montreal, QC, Canada: IEEE, 2019. https://doi.org/10.1109/ICRA.2019.8793495.

[2] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.

[3] Xiao, Pengchuan, Zhenlei Shao, Steven Hao, et al. “PandaSet: Advanced Sensor Suite Dataset for Autonomous Driving.” 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), IEEE, September 19, 2021, 3095–101. https://doi.org/10.1109/ITSC48978.2021.9565009.