Ensemble MCMC sampler

Cascaded affine invariant ensemble MCMC sampler. "The MCMC hammer"

gwmcmc is an implementation of the Goodman and Weare 2010 Affine

invariant ensemble Markov Chain Monte Carlo (MCMC) sampler. MCMC sampling

enables bayesian inference. The problem with many traditional MCMC samplers

is that they can have slow convergence for badly scaled problems, and that

it is difficult to optimize the random walk for high-dimensional problems.

This is where the GW-algorithm really excels as it is affine invariant. It

can achieve much better convergence on badly scaled problems. It is much

simpler to get to work straight out of the box, and for that reason it

truly deserves to be called the MCMC hammer.

(This code uses a cascaded variant of the Goodman and Weare algorithm).

USAGE:

[models,logP]=gwmcmc(minit,logPfuns,mccount,[Parameter,Value,Parameter,Value]);

INPUTS:

minit: an MxW matrix of initial values for each of the walkers in the

ensemble. (M:number of model params. W: number of walkers). W

should be atleast 2xM. (see e.g. mvnrnd).

logPfuns: a cell of function handles returning the log probality of a

proposed set of model parameters. Typically this cell will

contain two function handles: one to the logprior and another

to the loglikelihood. E.g. {@(m)logprior(m) @(m)loglike(m)}

mccount: What is the desired total number of monte carlo proposals.

This is the total number, -NOT the number per chain.

Named Parameter-Value pairs:

'StepSize': unit-less stepsize (default=2.5).

'ThinChain': Thin all the chains by only storing every N'th step (default=10)

'ProgressBar': Show a text progress bar (default=true)

'Parallel': Run in ensemble of walkers in parallel. (default=false)

OUTPUTS:

models: A MxWxT matrix with the thinned markov chains (with T samples

per walker). T=~mccount/p.ThinChain/W.

logP: A PxWxT matrix of log probabilities for each model in the

models. here P is the number of functions in logPfuns.

Note on cascaded evaluation of log probabilities:

The logPfuns-argument can be specifed as a cell-array to allow a cascaded

evaluation of the probabilities. The computationally cheapest function should be

placed first in the cell (this will typically the prior). This allows the

routine to avoid calculating the likelihood, if the proposed model can be

rejected based on the prior alone.

logPfuns={logprior loglike} is faster but equivalent to

logPfuns={@(m)logprior(m)+loglike(m)}

TIP: if you aim to analyze the entire set of ensemble members as a single

sample from the distribution then you may collapse output models-matrix

thus: models=models(:,:); This will reshape the MxWxT matrix into a

Mx(W*T)-matrix while preserving the order.

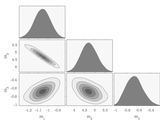

EXAMPLE: Here we sample a multivariate normal distribution.

%define problem:

mu = [5;-3;6];

C = [.5 -.4 0;-.4 .5 0; 0 0 1];

iC=pinv(C);

logPfuns={@(m)-0.5*sum((m-mu)'*iC*(m-mu))}

%make a set of starting points for the entire ensemble of walkers

minit=randn(length(mu),length(mu)*2);

%Apply the MCMC hammer

[models,logP]=gwmcmc(minit,logPfuns,100000);

models(:,:,1:floor(size(models,3)*.2))=[]; %remove 20% as burn-in

models=models(:,:)'; %reshape matrix to collapse the ensemble member dimension

scatter(models(:,1),models(:,2))

prctile(models,[5 50 95])

References:

Goodman & Weare (2010), Ensemble Samplers With Affine Invariance, Comm. App. Math. Comp. Sci., Vol. 5, No. 1, 65–80

Foreman-Mackey, Hogg, Lang, Goodman (2013), emcee: The MCMC Hammer, arXiv:1202.3665

WebPage: https://github.com/grinsted/gwmcmc

-Aslak Grinsted 2015

Cite As

Aslak Grinsted (2026). Ensemble MCMC sampler (https://github.com/grinsted/gwmcmc), GitHub. Retrieved .

MATLAB Release Compatibility

Platform Compatibility

Windows macOS LinuxCategories

- AI and Statistics > Statistics and Machine Learning Toolbox > Dimensionality Reduction and Feature Extraction >

Tags

Acknowledgements

Inspired by: 2D Bandwidth Estimator for KDE, Markov Chain Monte Carlo sampling of posterior distribution, Cornerplot

Inspired: Markov Chain Monte Carlo sampling of posterior distribution

Discover Live Editor

Create scripts with code, output, and formatted text in a single executable document.

Versions that use the GitHub default branch cannot be downloaded

| Version | Published | Release Notes | |

|---|---|---|---|

| 1.8.0.0 | changed title

|

|

|

| 1.7.0.0 | Adding FEX description yet again. |

|

|

| 1.6.0.0 | Updated title, and

|

|

|

| 1.5.0.0 | updated published html examples, and FEX description |

|

|

| 1.3.0.0 | Changed title |

|

|

| 1.2.0.0 | Moved to github |

|

|

| 1.1.0.0 | updated help description and typo in example. |

|

|

| 1.0.0.0 |

|