Scenario Generation

In automated driving applications, scenario generation is the process of building virtual scenarios from real-world vehicle data recorded from global positioning system (GPS), inertial measurement unit (IMU), camera, and lidar sensors. Automated Driving Toolbox™ provides functions and tools to automate the scenario generation process. You can preprocess sensor data, extract roads, localize actors, and get actor trajectories to create an accurate digital twin of a real-world scenario. You can then simulate the generated scenario to test your automated driving algorithms against real-world data.

To generate scenarios from recorded sensor data, download the Scenario Builder for Automated Driving Toolbox support package from the Add-On Explorer. For more information on downloading add-ons, see Get and Manage Add-Ons.

Functions

| Function | Description |
| --- | --- |
| getMapROI | Geographic bounding box coordinates from GPS data (Since R2022b) |
| roadprops | Extract road properties from road network file or map data (Since R2022b) |
| selectActorRoads | Extract properties of roads in path of actor (Since R2022b) |
| updateLaneSpec | Update lane specifications using sensor detections (Since R2022b) |
| actorprops | Generate actor properties from track list (Since R2022b) |
| actorTracklist | Store recorded actor track list data with timestamps (Since R2023a) |
| laneData | Store recorded lane boundary data with timestamps (Since R2023a) |
| laneBoundaryTracker | Track lane boundaries (Since R2023a) |
| laneBoundaryDetector | Detector for lane boundaries in images (Since R2023a) |
| roadrunnerLaneInfo | Generate lane information in RoadRunner HD Map format from lane boundary points (Since R2023a) |
| roadrunnerStaticObjectInfo | Generate static object and sign information in RoadRunner HD Map format (Since R2023a) |

Topics

- Overview of Scenario Generation from Recorded Sensor Data

Learn the basics of generating scenarios from recorded sensor data.

- Preprocess Lane Detections for Scenario Generation

Format lane detection data to update lane specifications for scenario generation.

- Smooth GPS Waypoints for Ego Localization

Create jitter-limited ego trajectory by smoothing GPS and IMU sensor data.

- Generate RoadRunner Scene Using Labeled Camera Images and GPS Data

Generate RoadRunner scene using labeled camera images and GPS data.

- Generate Scenario from Actor Track List and GPS Data

Generate ASAM OpenSCENARIO® v1.0 file using recorded actor track list and GPS data.

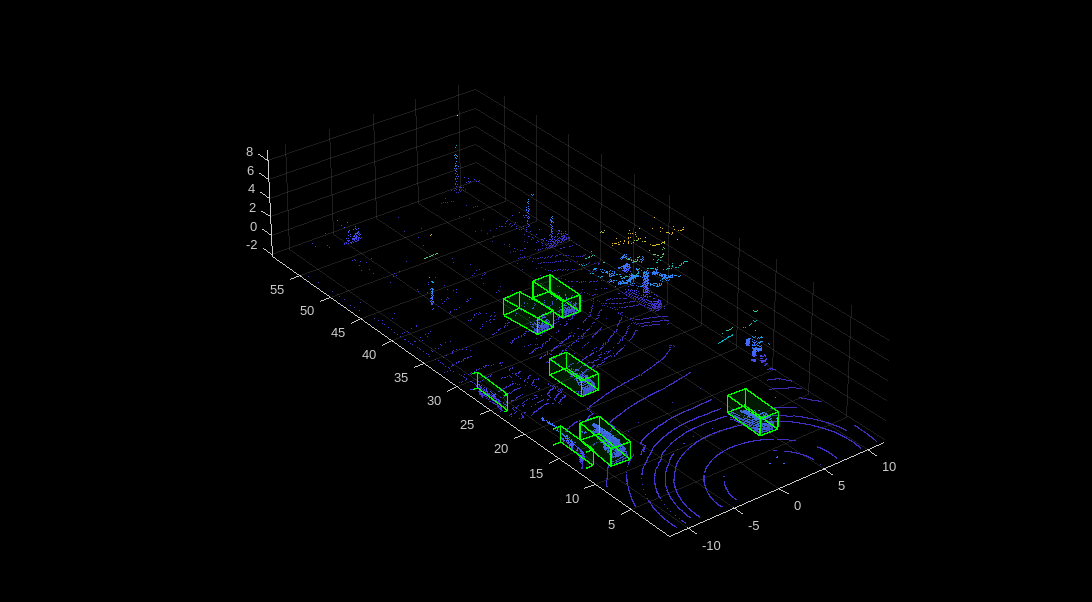

- Generate RoadRunner Scene from Recorded Lidar Data

Generate RoadRunner HD map from recorded lidar data using pretrained deep learning model.

- Generate High Definition Scene from Lane Detections and OpenStreetMap

Generate HD road scene using recorded lane detections, GPS data, and OpenStreetMap® data.

- Extract Vehicle Track List from Recorded Camera Data for Scenario Generation

Extract actor track list from raw camera data for scenario generation.

- Extract 3D Vehicle Information from Recorded Monocular Camera Data for Scenario Generation

Extract 3D vehicle information from recorded monocular camera data for scenario generation.

- Extract Key Scenario Events from Recorded Sensor Data

Extract key scenario events from recorded sensor data.

- Generate RoadRunner Scene with Traffic Signs Using Recorded Sensor Data

Generate RoadRunner scene with traffic signs using recorded sensor data.

- Generate RoadRunner Scene Using Aerial Lidar Data

Generate RoadRunner scene from aerial lidar data.