Send Deep Learning Batch Job to Cluster

This example shows how to train a deep neural network on a cluster while you continue working in MATLAB® or after you close it.

Training a deep learning network often takes hours or days. However, you can speed up your deep learning applications by using one or more high-performance GPUs or CPUs in a cluster. To use time efficiently, you can train a network as a batch job and fetch the results from the cluster when they are ready. You can continue working in MATLAB while computations take place or close MATLAB and obtain the results later.

This example trains a network on a cluster using different computational resources and compares the training speed. Use this example to learn how to make good use of your cluster and determine how suitable your cluster is for deep learning workflows. You can run this example on an on-premises cluster or a cloud cluster.

Develop Your Training Code

Start by prototyping your training code on your local machine. It is good practice to make sure that your code runs and that training is converging before deploying your code to a cluster.

This example trains a convolutional neural network to classify images using the CIFAR-10 data set. The clusterBench function, attached to this example as a supporting file, performs these steps:

Creates an augmented image datastore to augment the training images. How the cluster workers access the training data is discussed in a later section.

Defines the network architecture. The network includes three convolutional blocks, each containing eight convolutional layers.

Specifies the training options, including an output function that stops training after a specified amount of time elapses.

Loops over different ExecutionEnvironment options, training the network for a short time using each option.

For each execution environment, records the number of epochs trained for, the time elapsed, and the accuracy of the network on a test data set.

Returns a struct as output containing these metrics and the network with the highest accuracy.
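A time-based stop condition like the one in the list above can be sketched as follows. This assumes you pass the output function to trainingOptions through the OutputFcn option. The clusterBench supporting file might implement it differently, and the variable names here are illustrative only.

```matlab
% Stop training once a fixed time budget has elapsed.
maxTimeMinutes = 15;

% Start the timer immediately before training so that the budget
% covers only the training call.
trainingTimer = tic;

% An output function receives an info structure during training and
% returns true to stop training. This one ignores info and checks only
% the elapsed time, in seconds.
stopAfterTime = @(info) toc(trainingTimer) > 60*maxTimeMinutes;

options = trainingOptions("sgdm", ...
    MiniBatchSize=256, ...
    OutputFcn=stopAfterTime, ...
    Verbose=false);
```

Because the timer starts when tic runs, create the options just before you call the training function.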

The clusterBench function takes two inputs. The first input is required and specifies how many minutes to train the network on each execution environment. The second input is optional and specifies the mini-batch size used for training. If you do not specify a mini-batch size, the function uses a default value of 256.

When the function trains a network in parallel, the function increases the mini-batch size and learning rate proportionally to the number of workers. For example, if you are training using eight workers, the function multiplies the mini-batch size and learning rate by eight.
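The proportional scaling rule amounts to simple multiplication. In this sketch, the mini-batch size of 256 is the clusterBench default, while the base learning rate of 0.01 is a hypothetical value chosen for illustration.

```matlab
% Scale the mini-batch size and learning rate linearly with the number
% of workers used for parallel training.
numWorkers = 8;
baseMiniBatchSize = 256;   % default mini-batch size of clusterBench
baseLearningRate = 0.01;   % hypothetical base learning rate

miniBatchSize = baseMiniBatchSize*numWorkers;   % 2048
learningRate = baseLearningRate*numWorkers;     % 0.08

fprintf("Mini-batch size: %d, learning rate: %g\n",miniBatchSize,learningRate)
```

Each worker then still processes 256 observations per iteration, so the per-worker load matches the single-worker case.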

Access Cluster

If you have access to a cluster, discover it on your network by selecting Parallel > Discover Clusters in the Environment section of the Home tab. This selection opens the Discover Clusters dialog box, where you can search for MATLAB Parallel Server™ clusters. If you do not have access to a cluster, you can create a cluster in the cloud directly from the MATLAB desktop. For more information, see Create and Discover Clusters.

If your cluster uses MATLAB Job Scheduler, Microsoft® Windows® HPC Server, or another third-party scheduler, select On your network. This selection opens a new window that lists clusters as they are discovered. The list also includes any clusters that already have profiles. For more information about discovering clusters and using cluster profiles, see Discover Clusters and Use Cluster Profiles (Parallel Computing Toolbox).

Access a cluster using the parcluster function. This example uses a cluster called myGPUCluster with eight workers, each with an AMD EPYC 7R32 CPU and an NVIDIA® A10G GPU. Use the name of your own cluster profile instead.

cluster = parcluster("myGPUCluster");

You can query the number of workers in the cluster.

cluster.NumWorkers

ans = 8

Provide Data to Cluster Workers

Depending on your cluster, there might be several options for providing training data to your cluster workers. If your data set is large, you should consult your cluster administrator for advice.

If your cluster has a shared network file system, storing the data there is the simplest method for sharing data, because workers can access the data directly.

You can use cloud storage, which is particularly useful if you are using a cloud cluster for training. Cloud storage can be convenient, but accessing training data from remote storage can slow down network training.

You can copy the training data to each worker. This ensures that cluster workers have local access to the training data, which improves training speed, but creating multiple copies of the data might not be appropriate if your data set is large.

In this example, you specify the data to send to each worker using the AttachedFiles option of the batch function. For more information about using a network file system or cloud storage with your cluster, see Share Code with Workers (Parallel Computing Toolbox) and Work with Deep Learning Data in the Cloud.

When you use the AttachedFiles option, the software copies the files to a temporary location on the cluster workers and deletes them after the code is run.

After the software copies the data to the workers, the path to that data might not be the same in each worker. To allow the data to be processed in parallel across multiple workers in the cluster:

Determine the path to the data on each worker by calling the getAttachedFilesFolder function on each worker.

Create a datastore and specify the paths using the AlternateFileSystemRoots argument.
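The two steps above might look roughly like this. This is a sketch under several assumptions: it runs inside a batch job submitted with AttachedFiles="cifar10" and a Pool option, so a parallel pool is available; the attached folder contains a train subfolder of class-labeled images; and the variable names are hypothetical. The clusterBench supporting file contains the approach this example actually uses.

```matlab
% Sketch: build a datastore whose file paths resolve on every worker.
pool = gcp;

% Ask each pool worker where its copy of the attached folder lives.
spmd
    workerFolder = string(getAttachedFilesFolder("cifar10"));
end

% Collect the paths, starting with the worker that runs this code,
% which is where the datastore is created.
roots = string(getAttachedFilesFolder("cifar10"));
for k = 1:pool.NumWorkers
    roots(end+1) = workerFolder{k}; %#ok<AGROW>
end
roots = unique(roots,"stable");

% Each entry of roots is an equivalent root for the same data, so the
% datastore can remap its file paths on another worker. If every worker
% reports the same folder, no remapping is needed.
dsArgs = {"IncludeSubfolders",true,"LabelSource","foldernames"};
if numel(roots) > 1
    dsArgs = [dsArgs {"AlternateFileSystemRoots",roots}];
end
imdsTrain = imageDatastore(fullfile(roots(1),"train"),dsArgs{:});
```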

To see how the function determines the path and creates a datastore, open this example as a live script. Then, open the clusterBench function that is attached to this example as a supporting file.

Before sending the job to the cluster, first download the CIFAR-10 data set to your local machine. To download the CIFAR-10 data set, use the downloadCIFARToFolders function, attached to this example as a supporting file. This code downloads the data set to your current directory. If you already have a local copy of CIFAR-10, then you can skip this step.

directory = pwd;
[locationCifar10Train,locationCifar10Test] = downloadCIFARToFolders(directory);

The folder cifar10 already exists in the given directory.

Send Job to Cluster

To run the function on the cluster, use the batch function and specify these options:

Choose the cluster and the function to run on the cluster.

Specify the number of outputs expected from the function. In this example, the function returns a single structure of results.

Specify the number of cluster workers to use to run the parallel training. Because the pool requires N workers in addition to the worker running the job, the cluster must have at least N+1 workers available.

Attach the cifar10 folder to transfer it to the workers.
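To catch an undersized cluster before submitting the job, you could compare the pool size against the cluster's worker count, following the N+1 rule in the list above. This sketch reuses the myGPUCluster profile name from this example; the check itself is a hypothetical convenience, not part of clusterBench.

```matlab
% Hypothetical pre-submission check: a pool of numWorkers workers plus
% the worker that runs the batch job needs numWorkers + 1 workers.
cluster = parcluster("myGPUCluster");   % replace with your cluster profile
numWorkers = 7;
requiredWorkers = numWorkers + 1;

if cluster.NumWorkers < requiredWorkers
    error("Cluster has %d workers, but this job needs %d.", ...
        cluster.NumWorkers,requiredWorkers)
end
```

With the eight-worker cluster in this example, a pool of seven workers is the largest that fits.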

The job takes just over 5*maxTimeMinutes minutes to run, because the function trains for up to maxTimeMinutes minutes in each of five execution environments. If your cluster is not able to use certain execution environments, the function skips those execution environments and the job completes faster. For example, if the head node has only a single GPU, the job skips the "multi-gpu" execution environment.
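As a worked example of this estimate, assume the five environments are "cpu", "gpu", "multi-gpu", "parallel-cpu", and "parallel-gpu" (an assumption based on the ExecutionEnvironment values this example mentions). The estimate ignores setup and evaluation overhead, which is why the job takes "just over" this time.

```matlab
% Rough job-duration estimate: one training period of maxTimeMinutes
% for each execution environment that clusterBench times.
executionEnvironments = ["cpu" "gpu" "multi-gpu" "parallel-cpu" "parallel-gpu"];
maxTimeMinutes = 15;

estimatedMinutes = numel(executionEnvironments)*maxTimeMinutes   % 75

% Skipping the multi-gpu environment removes one training period.
skipped = "multi-gpu";
estimatedMinutesWithSkips = numel(setdiff(executionEnvironments,skipped))*maxTimeMinutes   % 60
```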

numOutputs = 1;

numWorkers = 7;

maxTimeMinutes = 15;

minibatchsize = 256;

job = batch(cluster,@clusterBench,numOutputs,{maxTimeMinutes,minibatchsize},Pool=numWorkers,AttachedFiles="cifar10");

You can continue working in MATLAB while computations take place.

Monitor Training Progress

You can see the current status of your job in the cluster by checking the Job Monitor. In the Environment section on the Home tab, select Parallel > Monitor Jobs to open the Job Monitor.
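If you prefer the command line to the Job Monitor, you can query the same information from the job and cluster objects. This sketch assumes the job and cluster variables created earlier in this example are still in the workspace.

```matlab
% Check the job state from the command line. The State property holds
% a value such as 'queued', 'running', or 'finished'.
disp(job.State)

% List the jobs stored for this cluster profile.
cluster.Jobs
```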

You can optionally monitor the progress of training in detail by sending data from the workers running the batch jobs to the MATLAB client. For an example that shows how to plot training progress when training a network in a batch job, see Use batch to Train Multiple Deep Learning Networks.

Fetch Results

After submitting jobs to the cluster, you can continue working in MATLAB while computations take place. If the rest of your code depends on the completion of a job, block MATLAB by using the wait command. In this case, wait for the job to finish.

wait(job)

After the job finishes, call fetchOutputs on the job object. Alternatively, you can retrieve results from the Job Monitor. In the Environment section on the Home tab, select Parallel > Monitor Jobs to open the Job Monitor. Then right-click a job to display the context menu and select Fetch Outputs.

results = fetchOutputs(job);

results = results{1};

If you close MATLAB, you can still recover the jobs in the cluster to fetch the results either while the computation takes place or after the computation completes. Before closing MATLAB, make a note of the job ID and then retrieve the job later using the findJob (Parallel Computing Toolbox) function.
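A minimal sketch of recovering a job in a later session follows. The file name myJobID.mat is hypothetical; any way of keeping the ID works. The profile name matches the myGPUCluster cluster used in this example.

```matlab
% Before closing MATLAB, store the job ID somewhere persistent.
jobID = job.ID;
save("myJobID.mat","jobID")

% In a later MATLAB session, reload the ID and reconnect to the cluster.
load("myJobID.mat","jobID")
cluster = parcluster("myGPUCluster");   % use your own cluster profile name
job = findJob(cluster,"ID",jobID);

% Block until the job finishes, then fetch its single output.
wait(job)
results = fetchOutputs(job);
results = results{1};
```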

Plot Results

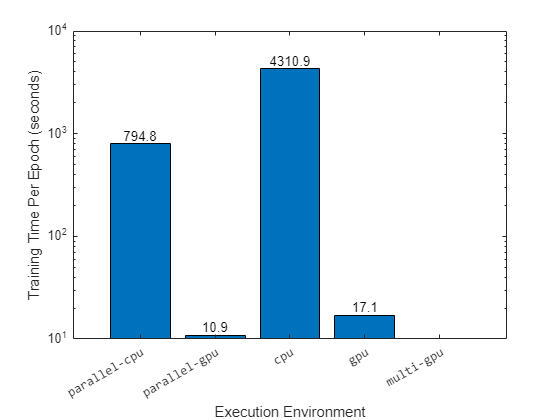

If your cluster does not have access to GPUs, the job skips training using the "gpu", "multi-gpu", and "parallel-gpu" execution environments. If your cluster only has access to one GPU per node, the job skips training using the "multi-gpu" execution environment. Query which execution environments were not timed.

skipped = results.skipped;
if isempty(skipped)
    disp("Skipped execution environments: none")
else
    disp("Skipped execution environments: ")
    disp(skipped)
end

Skipped execution environments:

multi-gpu

Plot the training time for each execution environment, normalized by the number of epochs.

accuracy = results.accuracy;
trainingTime = results.trainingTime;
trainingEpochs = results.trainingEpochs;
executionEnvironments = results.executionEnvironments;
% Display the execution environment names in a monospace font.
executionEnvironments = "\fontname{monospace}" + executionEnvironments;

figure
% Normalize the training time by the number of epochs trained.
b = bar(executionEnvironments,trainingTime./trainingEpochs);
b(1).Labels = round(b(1).YData,1);
xlabel("Execution Environment")
ylabel("Training Time Per Epoch (seconds)")
yscale("log")

Retrieve the network with the highest accuracy.

net = results.net

net =

dlnetwork with properties:

Layers: [78×1 nnet.cnn.layer.Layer]

Connections: [77×2 table]

Learnables: [98×3 table]

State: [48×3 table]

InputNames: {'imageinput'}

OutputNames: {'softmax'}

Initialized: 1

View summary with summary.

After you retrieve all of the required outputs, delete the job. Deleting the job removes it from the MATLAB session and from the cluster's JobStorageLocation.

delete(job)

Run Benchmark and Export Results

To run this code and export the results to PDF, call the export function in the Command Window.

export("clusterBench.mlx","benchmarkResults.pdf",Run=true);

See Also

batch (Parallel Computing Toolbox) | parcluster (Parallel Computing Toolbox) | trainnet