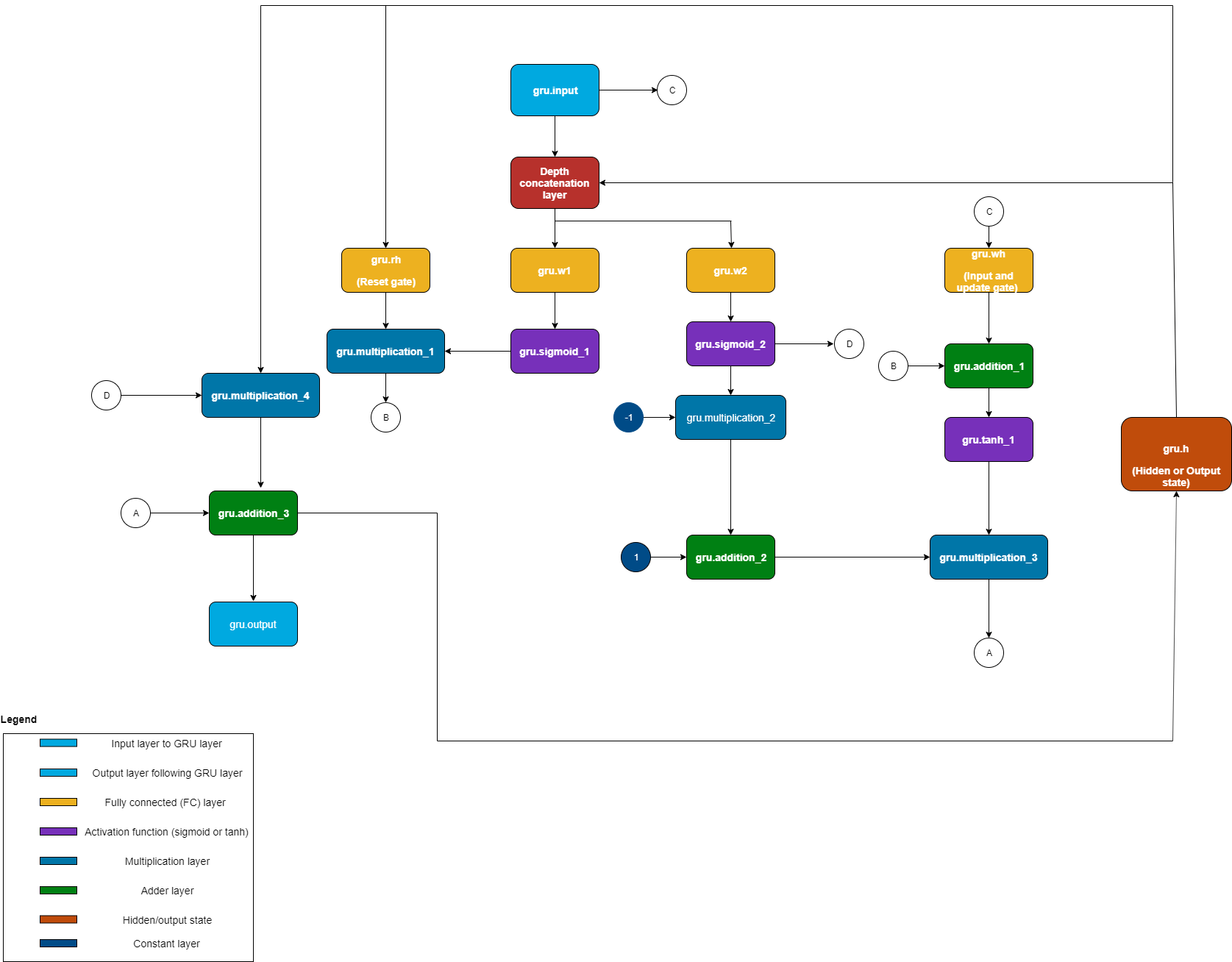

How Deep Learning HDL Toolbox Compiles the GRU Layer

To manually deploy a gated recurrent unit (GRU) layer network to your target board, learn how the compile method of the dlhdl.Workflow object interprets the GRU layer in a network. When you compile GRU layers, Deep Learning HDL Toolbox™ splits the GRU layer into components and generates instructions and memory offsets for those components.

The compile method of the dlhdl.Workflow object translates the:

Reset gate into gru.rh

Input and update gates into gru.wh

Then, the compile method:

Inserts a depth concatenation layer between the layer preceding the GRU layer and the gates of the GRU layer.

Generates sigmoid, hyperbolic tangent, multiplication, and addition layers to replace the mathematical operations of the GRU layer.
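The decomposition described above can be sketched in plain Python. This is only an illustration of how one GRU time step reduces to depth concatenation, fully connected, sigmoid, hyperbolic tangent, multiplication, and addition operations; it is not the code or the instruction stream that the toolbox generates. The gate equations follow one common GRU convention, and the function names and the parameter keys (`Wz`, `Wr`, `Wc`, and the matching biases) are assumptions made for this example.

```python
import math

# Primitive operations, one per kind of layer the text mentions.
def depth_concat(a, b):
    return list(a) + list(b)

def fully_connected(weights, bias, v):
    # weights is a list of rows; each row produces one output element.
    return [sum(w * a for w, a in zip(row, v)) + b
            for row, b in zip(weights, bias)]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]

def tanh(v):
    return [math.tanh(a) for a in v]

def multiply(a, b):
    return [x * y for x, y in zip(a, b)]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def gru_step(x, h, params):
    """One GRU time step built only from the primitives above.

    Assumed convention (not taken from the toolbox):
      z  = sigmoid(Wz [x; h] + bz)          update gate
      r  = sigmoid(Wr [x; h] + br)          reset gate
      c  = tanh(Wc [x; r * h] + bc)         candidate state
      h' = (1 - z) * c + z * h
    """
    xh = depth_concat(x, h)
    z = sigmoid(fully_connected(params["Wz"], params["bz"], xh))
    r = sigmoid(fully_connected(params["Wr"], params["br"], xh))
    c = tanh(fully_connected(params["Wc"], params["bc"],
                             depth_concat(x, multiply(r, h))))
    one_minus_z = [1.0 - a for a in z]
    return add(multiply(one_minus_z, c), multiply(z, h))

# Usage: with all-zero weights both gates equal 0.5 and the candidate is 0,
# so the new hidden state is half of the previous one.
x = [0.3, -0.7, 1.1]
h = [1.0, -2.0]
zero_w = [[0.0] * (len(x) + len(h)) for _ in h]
zero_b = [0.0] * len(h)
params = {"Wz": zero_w, "bz": zero_b,
          "Wr": zero_w, "br": zero_b,
          "Wc": zero_w, "bc": zero_b}
print(gru_step(x, h, params))  # [0.5, -1.0]
```

Writing the step this way shows why the compiler needs the concatenation and the element-wise layers: once the gates are expressed as fully connected operations on the concatenated input, the rest of the layer is only activation functions, products, and sums.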

When the network has multiple stacked GRU layers, the compile method uses the GRU layer name when generating the translated instructions. For example, if the network has three stacked GRU layers named gru_1, gru_2, and gru_3, the output of the compile method is gru_1.wh, gru_1.rh, gru_2.wh, gru_2.rh, and so on. The compiler schedules the different components of the GRU layer, such as fully connected layers, sigmoid blocks, tanh blocks, and so on, into different kernels in the deep learning processor architecture.
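The naming pattern for stacked layers can be illustrated with a short Python sketch. It only reproduces the layer-name prefixing described above for the wh and rh components; the helper name `component_names` is hypothetical, and the real compile output contains further entries that this example does not model.

```python
def component_names(layer_names, suffixes=("wh", "rh")):
    """Prefix each component suffix with the name of its GRU layer."""
    return [f"{name}.{suffix}" for name in layer_names for suffix in suffixes]

names = component_names(["gru_1", "gru_2", "gru_3"])
print(names)
# ['gru_1.wh', 'gru_1.rh', 'gru_2.wh', 'gru_2.rh', 'gru_3.wh', 'gru_3.rh']
```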

This image shows how the compile method translates the GRU layer:

To see the output of the compile method for a GRU layer network, see Run Sequence Forecasting Using a GRU Layer on an FPGA.