Developing Sensor Fusion and Perception Algorithms for Autonomous Landing of Unmanned Aircraft in Urban Environments

By Paolo Veneruso, Roberto Opromolla, and Giancarmine Fasano, University of Naples Federico II

Research interest in autonomous flight operations within urban areas is growing as the societal benefits and business potential of such operations become clearer. Delivering critical medical supplies is just one of the many possible applications for autonomous aircraft capable of vertical takeoff and landing (VTOL) in urban air mobility scenarios.

However, a number of design challenges need to be solved before quadrotors and other VTOL aircraft are fully capable of operating autonomously in urban environments. The approach and landing phases of autonomous flight are particularly difficult because the urban landscape includes many potential obstacles that can block or interfere with the global navigation satellite system (GNSS) signals used to position and navigate the aircraft. Landing is further complicated in low-visibility conditions, such as fog, rain, or night, in which visual data from onboard daylight cameras cannot be relied on alone.

When the landing pad is clearly visible, autonomous algorithms can employ a variety of well-established computer vision techniques for pose estimation (estimating the aircraft’s position and orientation) to support navigation during approach and landing (Figure 1). Our research group at the University of Naples Federico II is focused on extending the reliability of these techniques to low-visibility conditions in which visual sensors are impaired. To do this, we have developed a set of perception algorithms in MATLAB® that apply an extended Kalman filter (EKF) to integrate input from multiple sensors, including the aircraft’s visual system, inertial measurement unit (IMU), and GNSS receiver. We used data generated via simulations conducted with Simulink® and Unreal Engine® to develop, refine, and validate our algorithms. In addition to producing reliable pose estimates under low-visibility conditions, our algorithms can crosscheck the integrity of GNSS measurements, which may become unreliable at low altitudes.

Figure 1. A landing pad equipped with an AprilTag marker to facilitate an autonomous aircraft’s pose estimation.
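To make the idea of marker-based pose estimation concrete, the following Python sketch recovers only the camera-relative position of a square marker from its apparent size and pixel location, using the pinhole camera model. It is a simplified illustration, not the authors' MATLAB implementation: it assumes the marker directly faces the camera and ignores lens distortion and orientation, whereas a full pose estimate uses all four marker corners. The function name and all numeric values are hypothetical.

```python
def marker_relative_position(focal_px, cx, cy, marker_side_m, side_px, u, v):
    """Estimate the marker position in the camera frame (pinhole model).

    focal_px      : focal length in pixels (assumed known from calibration)
    cx, cy        : principal point in pixels
    marker_side_m : physical side length of the marker in meters
    side_px       : apparent side length of the marker in the image, in pixels
    u, v          : pixel coordinates of the marker center

    Assumes the marker plane is parallel to the image plane.
    """
    if side_px <= 0:
        raise ValueError("side_px must be positive")
    # Similar triangles: apparent size shrinks in proportion to distance.
    z = focal_px * marker_side_m / side_px
    # Back-project the pixel offset from the principal point at that depth.
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z


# Hypothetical example: a 1 m marker that appears 50 px wide,
# centered 100 px to the right of the principal point.
x, y, z = marker_relative_position(1000.0, 640.0, 360.0, 1.0, 50.0, 740.0, 360.0)
print(f"lateral offset {x:.2f} m, vertical offset {y:.2f} m, range {z:.2f} m")
```

Because the apparent size in pixels shrinks with distance, the same pixel-level detection error produces a larger position error far from the pad than close to it.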

Generating Simulation Data with Simulink

It is possible to pursue an experimental approach by collecting visual, IMU, and GNSS data from real-world flight tests, and we are using customized small drones to support our research. However, experimental tests cover a limited set of conditions and pose challenges, such as those associated with repeatability and control of visibility conditions. There are also practical issues: our testing area is wide open, with very few of the obstacles common to urban environments. Thus, simulations with Simulink and Unreal Engine play a key role in supporting the design, development, and testing of our solutions, because they are easier to design and control, can include a wide variety of system parameters, and are more representative of real-world urban environments.

We based the model for our simulations on the UAV Simple Flight model from UAV Toolbox (Figure 2). The model includes a Simulation 3D Scene Configuration block, which we used to configure the scene in Unreal Engine. We chose the scene of the Portland heliport and its surroundings. The model also includes a Simulation 3D UAV Vehicle block, which we used to define the quadrotor’s parameters and its trajectory, and a Simulation 3D Camera block that let us specify the mounting position and parameters of the monocular camera used to capture simulation data.

Figure 2. A Simulink model used to generate camera data during approach and landing.

We ran extensive simulations with this model under a variety of visibility conditions, wind conditions, and landing scenarios (Figure 3). During the simulations, we captured camera images from Unreal Engine and collected synthetic IMU and GNSS data from the Simulink model.
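As a rough illustration of what synthetic IMU and GNSS data look like, the Python sketch below corrupts a known descent trajectory with noise and bias. In the work described here the data came from the Simulink model and Unreal Engine; this stand-in only shows the principle, and the function name, noise levels, and bias value are hypothetical.

```python
import random


def simulate_descent(duration_s=10.0, dt=0.1, start_alt_m=50.0,
                     descent_rate_mps=2.0, gnss_sigma_m=1.5,
                     accel_sigma=0.05, accel_bias=0.02, seed=0):
    """Generate ground truth plus noisy GNSS altitude and IMU acceleration.

    All noise parameters are illustrative assumptions, not values from
    the article. A fixed seed makes the run repeatable.
    """
    rng = random.Random(seed)
    steps = int(round(duration_s / dt))
    samples = []
    for k in range(steps + 1):
        t = k * dt
        true_alt = start_alt_m - descent_rate_mps * t
        true_acc = 0.0  # constant descent rate, so no vertical acceleration
        samples.append({
            "t": t,
            "true_alt_m": true_alt,
            # GNSS: truth plus zero-mean Gaussian noise
            "gnss_alt_m": true_alt + rng.gauss(0.0, gnss_sigma_m),
            # IMU: truth plus a constant bias and Gaussian noise
            "imu_accel_mps2": true_acc + accel_bias + rng.gauss(0.0, accel_sigma),
        })
    return samples


data = simulate_descent()
print(len(data), "samples; final true altitude", data[-1]["true_alt_m"], "m")
```

Fixing the random seed is what gives simulation the repeatability that flight tests lack: the same scenario can be replayed while filter parameters are tuned.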

Figure 3. (Left) A simulated view of the Portland heliport as seen during a landing approach and (right) the same view obscured by fog.

Developing Sensor Fusion Algorithms in MATLAB

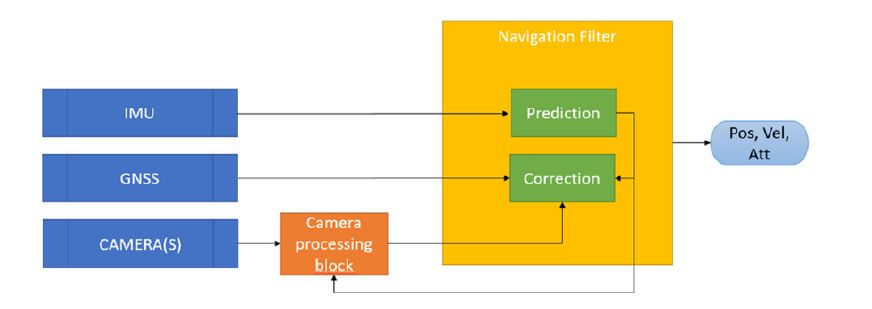

To explore the boundaries and feasibility of approach and landing operations, we designed a vision-aided, multisensor-based navigation architecture that integrates input from the aircraft’s IMU, GNSS receiver, and camera (Figure 4). Within this architecture, we used MATLAB to implement algorithms for a multimodal data fusion pipeline based on an EKF. The algorithms estimate the position, velocity, and attitude of the aircraft based on the sensor data that we generated via simulations with Simulink and Unreal Engine.

Figure 4. An architecture for estimating aircraft position, velocity, and attitude from multiple sensors.
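The predict and update cycle at the heart of such a filter can be sketched in a few lines. The Python example below is a deliberately reduced, linear Kalman filter that tracks only altitude and vertical velocity; it is an assumption-laden illustration, not the authors' MATLAB pipeline. A full EKF additionally handles attitude and linearizes nonlinear motion and camera models at each step. The class name and all numeric values are hypothetical.

```python
class AltitudeFilter:
    """Two-state Kalman filter: x = [altitude, vertical velocity]."""

    def __init__(self, alt0, vel0, alt_var0, vel_var0):
        self.x = [alt0, vel0]
        self.P = [[alt_var0, 0.0], [0.0, vel_var0]]

    def predict(self, accel, dt, accel_var):
        """Propagate the state with an IMU acceleration sample."""
        alt, vel = self.x
        self.x = [alt + vel * dt + 0.5 * accel * dt * dt, vel + accel * dt]
        (p00, p01), (p10, p11) = self.P
        # Covariance propagation F P F^T with F = [[1, dt], [0, 1]]
        n00 = p00 + dt * (p01 + p10) + dt * dt * p11
        n01 = p01 + dt * p11
        n10 = p10 + dt * p11
        n11 = p11
        # Process noise from acceleration uncertainty, G = [dt^2/2, dt]
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[n00 + g0 * g0 * accel_var, n01 + g0 * g1 * accel_var],
                  [n10 + g1 * g0 * accel_var, n11 + g1 * g1 * accel_var]]

    def update(self, z, meas_var):
        """Correct the state with an altitude measurement (GNSS or camera)."""
        (p00, p01), (p10, p11) = self.P
        innovation = z - self.x[0]       # measurement minus prediction
        s = p00 + meas_var               # innovation variance
        k0, k1 = p00 / s, p10 / s        # Kalman gain
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # Covariance update (I - K H) P with H = [1, 0]
        self.P = [[(1.0 - k0) * p00, (1.0 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
        return innovation, s


f = AltitudeFilter(alt0=50.0, vel0=-2.0, alt_var0=4.0, vel_var0=1.0)
f.predict(accel=0.0, dt=0.1, accel_var=0.01)   # IMU-driven step
f.update(z=49.0, meas_var=1.0)                 # altitude fix arrives
print("altitude estimate:", round(f.x[0], 3), "variance:", round(f.P[0][0], 3))
```

Calling `predict` repeatedly without `update` corresponds to coasting on the IMU alone: the estimate keeps moving, but its variance grows until the next measurement arrives.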

At the start of the approach, when the landing area is all but imperceptible via the camera, our algorithms rely more heavily on GNSS measurements. Closer to landing, the algorithms shift their emphasis to camera input, which provides the submeter accuracy required to land the aircraft on target. For this part of the process, when the landing pattern consists of AprilTag markers, the algorithms invoke the readAprilTag function from Computer Vision Toolbox™ to detect and identify the markers in the camera images (see Figure 1). When other landing patterns are adopted (for example, ad hoc light patterns for night operations), the algorithms use custom MATLAB functions instead. Throughout the approach and landing, the algorithms use input from the IMU to compensate for temporary dropouts from the other two sensors.
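The shift in emphasis from GNSS to camera does not need to be a hard switch. In a Kalman-type filter it can emerge from the measurement variances, because each sensor is weighted by the inverse of its variance. The Python sketch below shows this for two scalar measurements. The camera error model (error growing linearly with range) and every numeric value are hypothetical assumptions chosen only to illustrate the trend.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Inverse-variance fusion of two independent scalar estimates.

    Returns the fused estimate, its variance, and the weight given to est_a.
    """
    w_a = var_b / (var_a + var_b)
    fused = w_a * est_a + (1.0 - w_a) * est_b
    fused_var = var_a * var_b / (var_a + var_b)
    return fused, fused_var, w_a


def camera_sigma_m(range_m, sigma_per_m=0.05, floor_m=0.02):
    """Hypothetical camera error model: error grows linearly with range."""
    return max(floor_m, sigma_per_m * range_m)


def camera_weight(range_m, gnss_sigma_m=1.5):
    """Weight the fusion gives to the camera at a given range to the pad."""
    cam_var = camera_sigma_m(range_m) ** 2
    gnss_var = gnss_sigma_m ** 2
    return gnss_var / (gnss_var + cam_var)


for r in (100.0, 30.0, 5.0):
    print(f"range {r:5.1f} m -> camera weight {camera_weight(r):.2f}")
```

Under these assumed values the camera carries little weight at 100 m and dominates at 5 m, which mirrors the behavior described above.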

Recently, we have also implemented internal crosschecking in the algorithms to assess the reliability of the input received from the camera in low-visibility conditions, along with strategies that let the algorithms self-estimate the resulting degradation in navigation performance.
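The article does not detail how these crosschecks are implemented. One widely used way to test a measurement against a filter's prediction is innovation gating: the squared difference between measurement and prediction, normalized by its expected variance, is compared with a chi-square threshold. The Python sketch below shows that generic technique for a scalar measurement. It is an assumption offered for illustration, not the authors' method, and the function name and example values are hypothetical.

```python
# 95th percentile of the chi-square distribution with 1 degree of freedom
CHI2_95_1DOF = 3.841


def innovation_check(measurement, predicted, predicted_var, meas_var,
                     threshold=CHI2_95_1DOF):
    """Return (accepted, nis) for a scalar measurement.

    nis is the normalized innovation squared: the squared residual divided
    by the variance the filter expects for that residual.
    """
    innovation = measurement - predicted
    s = predicted_var + meas_var
    nis = innovation * innovation / s
    return nis <= threshold, nis


# A camera fix close to the prediction is accepted ...
print(innovation_check(50.5, 50.0, predicted_var=0.5, meas_var=0.5))
# ... while one far outside the expected spread is rejected.
print(innovation_check(60.0, 50.0, predicted_var=0.5, meas_var=0.5))
```

The same test can be applied to GNSS fixes, using the camera-aided estimate as the prediction, and a run of rejected measurements is one possible indicator that navigation performance is degrading.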

Ongoing Developments

Our group is actively pursuing several research opportunities stemming from our work on autonomous vertical approach and landing procedures in urban environments. For example, we are exploring ways to improve the landing areas to better support autonomous operations. Possible improvements include changes to the size, number, and positioning of visual markers, as well as changes to the light configurations and patterns used to delimit and illuminate the landing area.

We are also planning to integrate additional sensor modalities into our algorithm pipeline, including radar and lidar, as well as machine learning algorithms to assist with object detection in camera images. Further, we plan to combine the generation and subsequent processing of sensor data, which are currently implemented as two separate processes, into one. Currently, this separation accelerates the tuning of thresholds and other parameters in our algorithms because we do not need to generate new sensor data each time. Closing the loop by applying our algorithms to simulated data as they are generated, however, will make it easier to implement closed-loop landing guidance. It will also make it easier for other research teams to use our algorithms as they design, model, and simulate higher-level guidance or path-following algorithms with Simulink and UAV Toolbox.

Lastly, we are continuing to integrate our work with MATLAB, Simulink, and Unreal Engine into educational activities at the University of Naples Federico II, including courses on unmanned aircraft systems and autonomous aircraft design, as well as an onboard systems laboratory. These courses are offered at the bachelor’s and master’s levels in aerospace engineering and autonomous vehicle engineering.

Acknowledgments

This research is carried out in collaboration with Collins Aerospace. The authors gratefully acknowledge Carlo Tiana and Giacomo Gentile for their contributions to this work.

Published 2022